Posts Tagged 'HPE'

-

August 19, 2020

Navigating Product Name Changes for Marvell Ethernet Adapters at HPE

By Todd Owens, Field Marketing Director, Marvell

Hewlett Packard Enterprise (HPE) recently updated its product naming protocol for the Ethernet adapters in its HPE ProLiant and HPE Apollo servers. Its new approach is to include the ASIC vendor’s name in the HPE adapter’s product name. This commonsense approach eliminates the need for model number decoder rings on the part of Channel Partners and the HPE Field team and provides everyone with more visibility and clarity. This change also aligns more closely with the approach HPE has been taking with its “Open” adapters on HPE ProLiant Gen10 Plus servers. All of this is good news for everyone in the server sales ecosystem, including the end user. The products’ core SKU numbers remain the same, too, which is also good.

-

August 03, 2018

Infrastructure Powerhouse: Marvell and Cavium become one!

By Todd Owens, Field Marketing Director, Marvell

Marvell’s acquisition of Cavium closed on July 6th, 2018 and the integration is well under way. Cavium becomes a wholly-owned subsidiary of Marvell. Our combined mission as Marvell is to develop and deliver semiconductor solutions that process, move, store and secure the world’s data faster and more reliably than anyone else. The combination of the two companies makes for an infrastructure powerhouse, serving a variety of customers in the Cloud/Data Center, Enterprise/Campus, Service Providers, SMB/SOHO, Industrial and Automotive industries.

For our business with HPE, the first thing you need to know is that it is business as usual. The folks you engaged with on the I/O and processor technology we provided to HPE before the acquisition are the same folks you engage with now. Marvell is a leading provider of storage technologies, including ultra-fast read channels, high performance processors and transceivers that are found in the vast majority of hard disk drive (HDD) and solid-state drive (SSD) modules used in HPE ProLiant and HPE Storage products today.

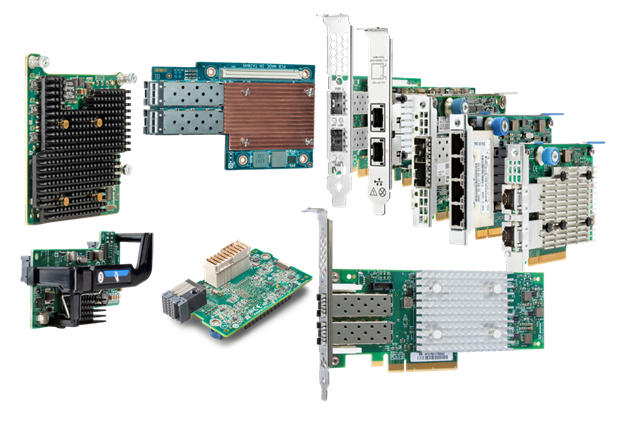

Our industry leading QLogic® 8/16/32Gb Fibre Channel and FastLinQ® 10/20/25/50Gb Ethernet I/O technology will continue to provide connectivity for HPE Server and Storage solutions. The focus for these products will continue to be the intelligent I/O of choice for HPE, with the performance, flexibility, and reliability we are known for.

Marvell’s Portfolio of FastLinQ Ethernet and QLogic Fibre Channel I/O Adapters

We will continue to provide ThunderX2® Arm® processor technology for HPC servers like the HPE Apollo 70 for high-performance compute applications. We will also continue to provide Ethernet networking technology that is embedded into HPE Servers and Storage today and Marvell ASIC technology used for the iLO5 baseboard management controller (BMC) in all HPE ProLiant and HPE Synergy Gen10 servers.

iLO 5 for HPE ProLiant Gen10 is deployed on Marvell SoCs

That sounds great, but what’s going to change over time?

The combined company now has a much broader portfolio of technology to help HPE deliver best-in-class solutions at the edge, in the network and in the data center.

Marvell has industry-leading switching technology from 1GbE to 100GbE and beyond. This enables us to deliver connectivity from the IoT edge, to the data center and the cloud. Our Intelligent NIC technology provides compression, encryption and more to enable customers to analyze network traffic faster and more intelligently than ever before. Our security solutions and enhanced SoC and Processor capabilities will help our HPE design-in team collaborate with HPE to innovate next-generation server and storage solutions.

Over time, you’ll also see a shift in our branding and in where you access information. While our product-specific brands, like ThunderX2 for Arm, QLogic for Fibre Channel and FastLinQ for Ethernet, will remain, many things will transition from Cavium to Marvell. Our web-based resources will start to change, as will our email addresses. For example, you can now access our HPE Microsite at www.marvell.com/hpe. Soon, you’ll be able to contact us at “hpesolutions@marvell.com” as well. The collateral you leverage today will be updated over time. In fact, this has already started with updates to our HPE-specific Line Card, our HPE Ethernet Quick Reference Guide, our Fibre Channel Quick Reference Guides and our presentation materials. Updates will continue over the next few months.

In summary, we are bigger and better. We are one team that is more focused than ever to help HPE, their partners and customers thrive with world-class technology we can bring to bear. If you want to learn more, engage with us today. Our field contact details are here. We are all excited for this new beginning to make “I/O and Infrastructure Matter!” each and every day.

-

May 02, 2018

Cavium FastLinQ Ethernet Adapters Available for HPE Cloudline Servers

By Todd Owens, Field Marketing Director, Marvell

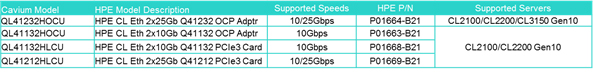

Are you considering deploying HPE Cloudline servers in your hyper-scale environment? If you are, be aware that HPE now offers select Cavium™ FastLinQ® 10GbE and 10/25GbE Adapters as options for HPE Cloudline CL2100, CL2200 and CL3150 Gen10 Servers. The adapters supported on the HPE Cloudline servers are shown in Table 1 below.

Table 1: Cavium FastLinQ 10GbE and 10/25GbE Adapters for HPE Cloudline Servers

As today’s hyper-scale environments grow, the Ethernet I/O needs go well beyond providing basic L2 NIC connectivity. Faster processors, increase in scale, high performance NVMe and SSD storage and the need for better performance and lower latency have started to shift some of the performance bottlenecks from servers and storage to the network itself. That means architects of these environments need to rethink connectivity options.

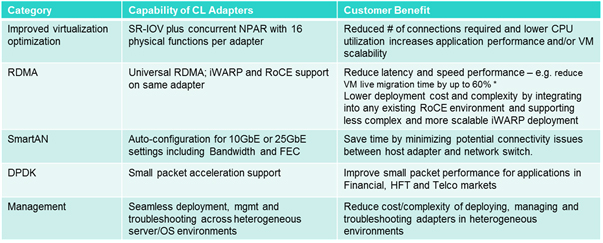

While HPE already has some good I/O offerings for Cloudline from other vendors, having Cavium FastLinQ adapters in the portfolio increases the I/O features and capabilities available. Advanced features from Cavium like Universal RDMA, SmartAN™, DPDK, NPAR and SR-IOV allow architects to design more flexible and scalable hyper-scale environments.

Cavium’s advanced feature set provides offload technologies that shift the burden of managing the I/O from the O/S and CPU to the adapter itself. Some of the benefits of offloading I/O tasks include:

- Lower CPU utilization, freeing up resources for applications or more VM scalability

- Faster processing of small-packet I/O with DPDK

- Time saved by automating adapter connectivity between 10GbE and 25GbE

- Reduced latency through direct memory access for I/O transactions, increasing performance

- Network isolation and QoS at the VM level to improve VM application performance

- Reduced TCO with heterogeneous management

To deliver these benefits, customers can take advantage of some or all the advanced features in the Cavium FastLinQ Ethernet adapters for HPE Cloudline. Here’s a list of some of the technologies available in these adapters.

* Source: Demartek findings

Table 2: Advanced Features in Cavium FastLinQ Adapters for HPE Cloudline

Network Partitioning (NPAR) virtualizes the physical port into eight virtual functions on the PCIe bus. This makes a dual port adapter appear to the host O/S as if it were eight individual NICs. Furthermore, the bandwidth of each virtual function can be fine-tuned in increments of 500Mbps, providing full Quality of Service on each connection. SR-IOV is an additional virtualization offload these adapters support that moves management of VM to VM traffic from the host hypervisor to the adapter. This frees up CPU resources and reduces VM to VM latency.
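To make the NPAR bandwidth tuning described above more concrete, here is a minimal planning sketch in Python. It is not a Cavium or Marvell API: the function name, the constants and the rule that allocations are treated as guaranteed minimums whose sum cannot exceed the port speed are all assumptions made for illustration. Only the 500Mbps increment and the eight partitions come from the text.

```python
# Simplified, hypothetical model of NPAR bandwidth planning.
# Nothing here is a vendor API; names and rules are illustrative only.

STEP_MBPS = 500      # tuning increment quoted in the text
MAX_PARTITIONS = 8   # partitions per physical port quoted in the text


def remaining_bandwidth_mbps(port_speed_mbps, allocations_mbps):
    """Validate per-partition allocations for one port and return unallocated Mbps.

    Assumption: allocations are guaranteed minimums, so their sum
    may not exceed the port speed.
    """
    if len(allocations_mbps) > MAX_PARTITIONS:
        raise ValueError(f"at most {MAX_PARTITIONS} partitions per port")
    for bw in allocations_mbps:
        if bw <= 0 or bw % STEP_MBPS != 0:
            raise ValueError(
                f"{bw} Mbps is not a positive multiple of {STEP_MBPS} Mbps"
            )
    total = sum(allocations_mbps)
    if total > port_speed_mbps:
        raise ValueError(
            f"allocations total {total} Mbps, port offers {port_speed_mbps} Mbps"
        )
    return port_speed_mbps - total


# One 25GbE port split into four partitions of different sizes.
plan = [10_000, 5_000, 5_000, 2_500]
print(remaining_bandwidth_mbps(25_000, plan))
```

With this plan, 22,500Mbps of the 25GbE port is committed and 2,500Mbps is left unallocated. An allocation such as 750Mbps would be rejected because it is not a multiple of the 500Mbps step.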

Remote Direct Memory Access (RDMA) is an offload that routes I/O traffic directly from the adapter to the host memory. This bypasses the O/S kernel and can improve performance by reducing latency. The Cavium adapters support what is called Universal RDMA, which is the ability to support both RoCEv2 and iWARP protocols concurrently. This provides network administrators more flexibility and choice for low latency solutions built with HPE Cloudline servers.

SmartAN is a Cavium technology available on the 10/25GbE adapters that addresses issues related to bandwidth matching and the need for Forward Error Correction (FEC) when switching between 10GbE and 25GbE connections. For 25GbE connections, either Reed-Solomon FEC (RS-FEC) or Fire Code FEC (FC-FEC) is required to correct bit errors that occur at higher bandwidths. For the details behind SmartAN technology you can refer to the Marvell technology brief here.

Support for Data Plane Development Kit (DPDK) offloads accelerates the processing of small packet I/O transmissions. This is especially important for applications in the Telco NFV and high-frequency trading environments.

For simplified management, Cavium provides a suite of utilities that allow for configuration and monitoring of the adapters and that work across all the popular O/S environments, including Microsoft Windows Server, VMware and Linux. Cavium’s unified management suite includes QCC GUI, CLI and vCenter plugins, as well as PowerShell Cmdlets for scripting configuration commands across multiple servers. Cavium’s unified management utilities can be downloaded from www.cavium.com.

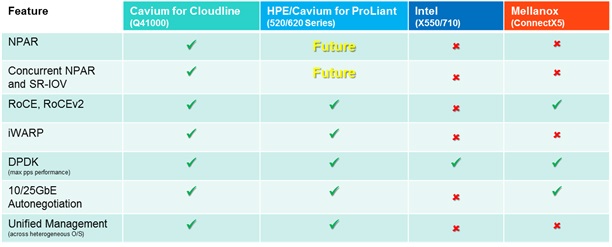

Each of the Cavium adapters shown in Table 1 supports all of the capabilities noted above and is available in standup PCIe or OCP 2.0 form factors for use in the HPE Cloudline Gen10 Servers. One question you may have is how these adapters compare to other offerings for Cloudline and to those offered in HPE ProLiant servers. For that, we can look at the comparison chart in Table 3.

Table 3: Comparison of I/O Features by Ethernet Supplier

Given that Cloudline is targeted for hyper-scale service provider customers with large and complex networks, the Cavium FastLinQ Ethernet adapters for HPE Cloudline offer administrators much more capability and flexibility than other I/O offerings. If you are considering HPE Cloudline servers, then you should also consider Cavium FastLinQ as your I/O of choice.

-

April 05, 2018

VMware vSAN ReadyNode Recipes Can Use Substitutions

By Todd Owens, Field Marketing Director, Marvell

When you are baking a cake, at times you substitute in different ingredients to make the result better. The same can be done with VMware vSAN ReadyNode configurations, or recipes. Some changes to the documented configurations can make the end solution much more flexible and scalable. VMware allows certain elements within a vSAN ReadyNode bill of materials (BOM) to be substituted. In this VMware blog, the author outlines the server elements in the BOM that can change, including:

- CPU

- Memory

- Caching Tier

- Capacity Tier

- NIC

- Boot Device

Ok, so we know what substitutions we can make in these vSAN storage solutions. What are the benefits to the customer for making this change?

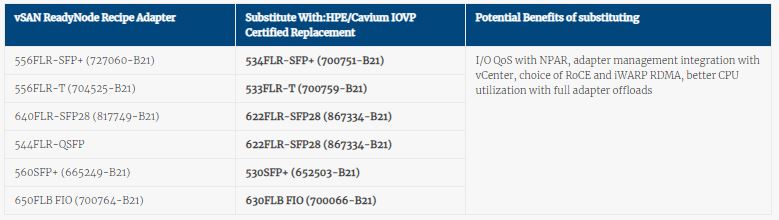

There are several benefits to the HPE/Cavium technology compared to the other adapter offerings.

- HPE 520/620 Series adapters support Universal RDMA – the ability to support both RoCE and iWARP RDMA protocols with the same adapter.

- Why Does This Matter? Universal RDMA offers flexibility of choice when low latency is a requirement. RoCE works great if customers have already deployed lossless Ethernet infrastructure. iWARP is a great choice for greenfield environments as it works on existing networks and doesn’t require the complexity of lossless Ethernet, and thus scales far more easily.

- Concurrent Network Partitioning (NPAR) and SR-IOV

- NPAR (Network Partitioning) allows for virtualization of the physical adapter port. SR-IOV offload moves management of the VM network from the Hypervisor (CPU) to the Adapter. With HPE/Cavium adapters, these two technologies can work together to optimize the connectivity for virtual server environments and offload the Hypervisor (and thus CPU) from managing VM traffic, while providing full Quality of Service at the same time.

- Storage Offload

- Ability to reduce CPU utilization by offering iSCSI or FCoE Storage offload on the adapter itself. The freed-up CPU resources can then be used for other, more critical tasks and applications. This also reduces the need for dedicated storage adapters, connectivity hardware and switches, lowering overall TCO for storage connectivity.

- Offloads in general – In addition to the RDMA, Storage and SR-IOV Offloads mentioned above, HPE/Cavium Ethernet adapters also support TCP/IP Stateless Offloads and DPDK small packet acceleration offloads. Each of these offloads moves work from the CPU to the adapter, reducing the CPU utilization associated with I/O activity. As mentioned in my previous blog, because these offloads bypass tasks in the O/S Kernel, they also mitigate any performance issues associated with Spectre/Meltdown vulnerability fixes on x86 systems.

- Adapter Management integration with vCenter – All HPE/Cavium Ethernet adapters are managed by Cavium’s QCC utility, which can be fully integrated into VMware vCenter. This provides a much simpler approach to I/O management in vSAN configurations.

-

February 20, 2018

If You're Not Using Intelligent I/O Adapters, You Should Be!

By Todd Owens, Field Marketing Director, Marvell

Like a kid in a candy store, choose I/O wisely.

Remember, as a child, making a quick stop at the convenience store and standing in front of the candy aisle while your parents said, “hurry and pick one”? With so many choices, the decision was often confusing. With time running out, you’d usually just grab the name-brand candy you were familiar with. But what were you missing out on? Perhaps now you realize there were more delicious or healthy offerings you could have chosen.

I use this as an analogy to discuss the choice of I/O technology for use in server configurations. There are lots of choices and it takes time to understand all the differences. As a result, system architects in many cases just fall back to the legacy name-brand adapter they have become familiar with. Is this the best option for their client though? Not always. Here are some reasons why.

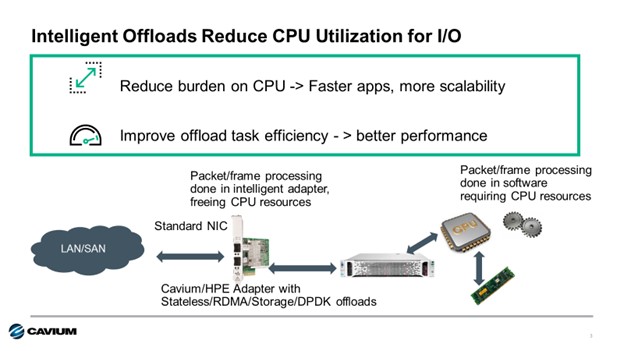

Some of today’s Ethernet adapters provide added capabilities that I refer to as “Intelligent I/O”. These adapters utilize a variety of offload technology and other capabilities to take on tasks associated with I/O processing that are typically done in software by the CPU when using a basic “standard” Ethernet adapter. Intelligent offloads include things like SR-IOV, RDMA, iSCSI, FCoE or DPDK. Each of these offloads the work to the adapter and, in many cases, bypasses the O/S kernel, speeding up I/O transactions and increasing performance.

As servers become more powerful and get packed with more virtual machines, running more applications, CPU utilizations of 70-80% are now commonplace. By using adapters with intelligent offloads, CPU utilization for I/O transactions can be reduced significantly, giving server administrators more CPU headroom. This means more CPU resources for applications or to increase the VM density per server.

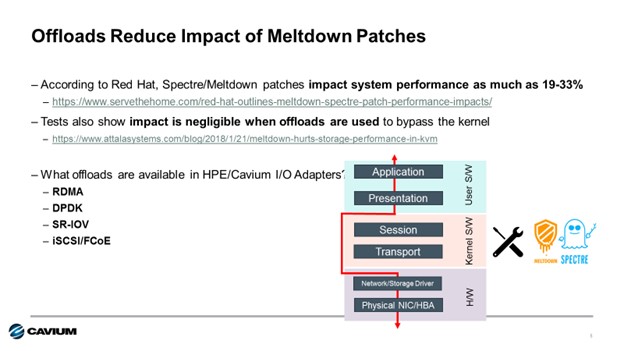

Another reason is to mitigate the performance impact of the Spectre and Meltdown fixes now required for x86 server processors. The side-channel vulnerabilities known as Spectre and Meltdown in x86 processors required kernel patches from the CPU vendor. These patches can significantly reduce CPU performance. For example, Red Hat reported the impact could be as much as a 19% performance degradation. That’s a big performance hit.

Storage offloads and offloads like SR-IOV, RDMA and DPDK all bypass the O/S kernel. Because they bypass the kernel, the performance impacts of the Spectre and Meltdown fixes are bypassed as well. This means I/O transactions with intelligent I/O adapters are not impacted by these fixes, and I/O performance is maximized.

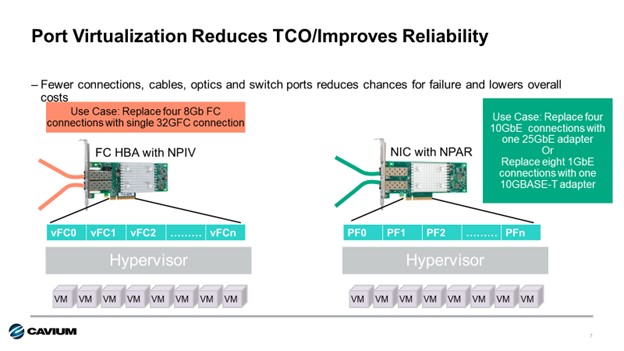

Finally, intelligent I/O can play a role in reducing cost and complexity and optimizing performance in virtual server environments. Some intelligent I/O adapters have port virtualization capabilities. Cavium Fibre Channel HBAs implement N-port ID Virtualization, or NPIV, to allow a single Fibre Channel port to appear as multiple virtual Fibre Channel adapters to the hypervisor. For Cavium FastLinQ Ethernet Adapters, Network Partitioning, or NPAR, is utilized to provide similar capability for Ethernet connections. Up to eight independent connections per port can be presented to the host O/S, making a single dual-port adapter look like 16 NICs to the operating system. Each virtual connection can be set to specific bandwidth and priority settings, providing full quality of service per connection.

The advantage of this port virtualization capability is two-fold. First, the number of cables and connections to a server can be reduced. In the case of storage, four 8Gb Fibre Channel connections can be replaced by a single 32Gb Fibre Channel connection. For Ethernet, eight 1GbE connections can easily be replaced by a single 10GbE connection, and two 10GbE connections can be replaced with a single 25GbE connection, leaving 5Gb/s (20% of the 25GbE link) to spare.
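The consolidation arithmetic above can be checked with a short Python sketch. It uses nominal link speeds only and ignores encoding and protocol overhead, which is a simplifying assumption; the function name `consolidate` is hypothetical and exists only for this illustration.

```python
# Back-of-the-envelope check of the link consolidation examples.
# Assumption: nominal link speeds in Gb/s, no encoding or protocol overhead.


def consolidate(count, old_gbps, new_gbps):
    """Return (aggregate old Gb/s, spare Gb/s on the new link, spare fraction of new link)."""
    aggregate = count * old_gbps
    spare = new_gbps - aggregate
    if spare < 0:
        raise ValueError("the new link is slower than the links it replaces")
    return aggregate, spare, spare / new_gbps


examples = [
    ("4 x 8Gb FC  -> 1 x 32Gb FC", 4, 8, 32),
    ("8 x 1GbE    -> 1 x 10GbE", 8, 1, 10),
    ("2 x 10GbE   -> 1 x 25GbE", 2, 10, 25),
]

for label, count, old, new in examples:
    aggregate, spare, fraction = consolidate(count, old, new)
    print(f"{label}: replaces {aggregate} Gb/s, {spare} Gb/s spare ({fraction:.0%} of new link)")
```

Under these assumptions, the Fibre Channel case is an exact match with nothing to spare, while both Ethernet cases leave 20% of the new link unused (2Gb/s of 10GbE and 5Gb/s of 25GbE).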

At HPE, there are more than fifty 10GbE-100GbE Ethernet adapters to choose from across the HPE ProLiant, Apollo, BladeSystem and HPE Synergy server portfolios. That’s a lot of documentation to read and compare. Cavium is proud to be a supplier of eighteen of these Ethernet adapters, and we’ve created a handy quick reference guide to highlight which of these offloads and virtualization features are supported on which adapters. View the complete Cavium HPE Ethernet Adapter Quick Reference guide here.

For Fibre Channel HBAs, there are fewer choices (only nineteen), but we make a quick reference document available for our HBA offerings at HPE as well. You can view the Fibre Channel HBA Quick Reference here.

In summary, when configuring HPE servers, think twice before selecting your I/O device. Choose an Intelligent I/O Adapter like those from HPE and Cavium. Cavium provides the broadest portfolio of intelligent Ethernet and Fibre Channel adapters for HPE Gen9 and Gen10 Servers and they support most, if not all, of the features mentioned in this blog. The best news is that the HPE/Cavium adapters are offered at the same or lower price than other products with fewer features. That means with HPE and Cavium I/O, you get more for less, and it just works too!
