By Nicola Bramante, Senior Principal Engineer, Connectivity Marketing, Marvell

The exponential growth in AI workloads is driving new connectivity requirements for data rate, bandwidth and distance, especially in scale-up applications. With direct attach copper (DAC) cables reaching their limits in bandwidth and distance, a new class of cables, active copper cables (ACCs), is coming to market for short-reach links within a data center rack and between racks. Designed for connections up to 2 to 2.5 meters long, ACCs can transmit signals farther than traditional passive DACs in the 200G/lane fabrics that hyperscalers will soon deploy in their rack infrastructures.

At the same time, a 1.6T ACC consumes a minuscule 2.5 watts of power and can be built with fewer and less sophisticated components than longer active electrical cables (AECs) or active optical cables (AOCs). Together, these features give ACCs an optimal mix of bandwidth, power, and cost for server-to-server or server-to-switch connections within the same rack.
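To put the 2.5 W figure in perspective, it can be converted to energy per bit. The sketch below is a back-of-the-envelope calculation that assumes the 2.5 W covers the entire 1.6T cable and that 1.6 Tb/s is carried as eight 200G lanes; neither detail is stated in the text.

```python
# Back-of-the-envelope energy per bit for a 1.6T ACC.
# Assumptions (not from the source): 2.5 W covers the whole cable, and the
# 1.6 Tb/s aggregate is carried as 8 lanes x 200 Gb/s.

CABLE_POWER_W = 2.5          # total cable power, watts
AGGREGATE_RATE_BPS = 1.6e12  # 1.6 Tb/s
LANE_RATE_BPS = 200e9        # 200 Gb/s per lane


def energy_per_bit_pj(power_w: float, rate_bps: float) -> float:
    """Return energy per bit in picojoules (1 pJ = 1e-12 J)."""
    return power_w / rate_bps * 1e12


lanes = round(AGGREGATE_RATE_BPS / LANE_RATE_BPS)
print(f"lanes: {lanes}")
print(f"energy per bit: {energy_per_bit_pj(CABLE_POWER_W, AGGREGATE_RATE_BPS):.4f} pJ/bit")
print(f"power per lane: {CABLE_POWER_W / lanes:.4f} W")
```

Under these assumptions the cable works out to roughly 1.6 pJ per bit, or about 0.31 W per 200G lane.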

Marvell announced its first ACC linear equalizers for building ACCs last month.

Inside the Cable

ACCs effectively bring technology originally developed for optics into copper cables. The idea is to use optical technologies to extend bandwidth, distance and performance while retaining copper’s economics and reliability. Where ACCs differ from other cable types is in the components added to them and in the way they leverage the capabilities of the switch or other device to which they are connected.

ACCs include an equalizer that boosts signals received from the opposite end of the connection. As analog devices, ACC equalizers are relatively inexpensive compared to digital alternatives, consume minimal power and add very little latency.
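One way to picture what a linear equalizer does is through its frequency response: it applies more gain at high frequencies, where copper loses the most signal. The sketch below uses a common textbook one-zero, two-pole model of a continuous-time linear equalizer (CTLE). It is an illustration only; the text does not describe the Marvell equalizer's transfer function, and every pole, zero and gain value here is hypothetical.

```python
import math

# Textbook one-zero, two-pole CTLE model (all values hypothetical):
#   H(s) = A_DC * (1 + s/wz) / ((1 + s/wp1) * (1 + s/wp2))
# The zero sits well below the poles, so gain rises with frequency,
# offsetting the copper channel's high-frequency loss.

A_DC = 1.0       # DC gain (0 dB), assumed
F_ZERO = 5e9     # zero frequency in Hz, assumed
F_POLE1 = 60e9   # first pole in Hz, assumed
F_POLE2 = 100e9  # second pole in Hz, assumed


def ctle_gain(f_hz: float) -> float:
    """Magnitude of H(j*2*pi*f) for the CTLE model above."""
    s = 2j * math.pi * f_hz
    wz, wp1, wp2 = (2 * math.pi * f for f in (F_ZERO, F_POLE1, F_POLE2))
    h = A_DC * (1 + s / wz) / ((1 + s / wp1) * (1 + s / wp2))
    return abs(h)


def to_db(gain: float) -> float:
    """Convert a voltage gain ratio to decibels."""
    return 20 * math.log10(gain)


# 53 GHz is roughly the Nyquist frequency (half the symbol rate) of a
# 200G PAM4 lane at about 106 GBd (assumed operating point).
for f in (0.0, 1e9, 10e9, 26.5e9, 53e9):
    print(f"{f / 1e9:6.1f} GHz: {to_db(ctle_gain(f)):+.1f} dB")
```

Because the boost is applied in the analog domain, no data conversion or digital processing is needed, which is consistent with the low power and latency described above.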

By Winnie Wu, Senior Director Product Marketing at Marvell

Welcome to the beginning of row-scale computing.

At the 2025 OCP Global Summit, Marvell and Infraeo will showcase a breakthrough in high-speed interconnect technology — a 9-meter active electrical cable (AEC) capable of transmitting 800G across standard copper. The demonstration will take place in the Marvell booth #B1.

This latest innovation brings data center architecture one step closer to full row-scale AI system design, allowing copper connections that stretch across seven racks, nearly the length of a standard 10-rack row. It builds on the 7-meter AEC that Marvell demonstrated at OFC 2025, pushing high-speed copper technology even further beyond what was thought possible.

Pushing the Boundaries of Copper

Until now, copper connections in large-scale AI systems have been limited by reach. Traditional electrical cables lose signal quality as distance increases, restricting system architects to a few meters between servers or racks. The 9-meter AEC changes that equation.

By combining high-performance digital signal processing (DSP) with advanced noise reduction and signal integrity engineering, the new design extends copper’s effective range well beyond conventional limits, maintaining clean, low-latency data transfer over distances once thought achievable only with optical fiber.
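The text does not detail the AEC's DSP architecture, but a feed-forward equalizer (FFE) is one common DSP building block for undoing the inter-symbol interference (ISI) that builds up over long copper runs. The minimal sketch below assumes a hypothetical channel that adds half of the previous symbol to each PAM4 symbol, then applies a three-tap FFE whose taps truncate the channel's inverse.

```python
import random

# Illustrative 3-tap feed-forward equalizer (FFE). Hypothetical channel:
#   y[n] = x[n] + a * x[n-1]
# The taps [1, -a, a^2] truncate the channel's inverse, so the equalized
# output is x[n] + a^3 * x[n-3]: a much smaller residual ISI term.

A = 0.5                  # post-cursor ISI coefficient, assumed
PAM4 = (-3, -1, 1, 3)    # PAM4 symbol levels
TAPS = (1.0, -A, A * A)  # truncated inverse of (1 + a*z^-1)


def channel(x):
    """Apply one post-cursor of ISI; the symbol before x[0] is taken as 0."""
    return [x[n] + A * (x[n - 1] if n > 0 else 0) for n in range(len(x))]


def ffe(y, taps=TAPS):
    """Causal FIR filter: z[n] = sum_k taps[k] * y[n-k]."""
    return [
        sum(t * y[n - k] for k, t in enumerate(taps) if n - k >= 0)
        for n in range(len(y))
    ]


random.seed(1)
tx = [random.choice(PAM4) for _ in range(1000)]
rx = channel(tx)
eq = ffe(rx)

# Compare from n = 3 onward, where the FFE output has its steady-state form.
raw_err = max(abs(r - t) for r, t in zip(rx[3:], tx[3:]))
eq_err = max(abs(e - t) for e, t in zip(eq[3:], tx[3:]))
print(f"worst-case error before FFE: {raw_err:.3f}, after FFE: {eq_err:.3f}")
```

A production retimer would adapt its taps to the measured channel and typically combine an FFE with other stages; the fixed taps here only show the principle.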

By Annie Liao, Product Management Director, ODSP Marketing, Marvell

For over 20 years, PCIe (Peripheral Component Interconnect Express) has been the dominant standard for connecting processors, NICs, drives and other components within servers, thanks to the protocol’s low latency and high bandwidth and to the growing PCIe expertise across the technology ecosystem. It will also play a leading role in defining the next generation of AI computing systems through continued performance increases and the combination of PCIe with optics.

Here’s why:

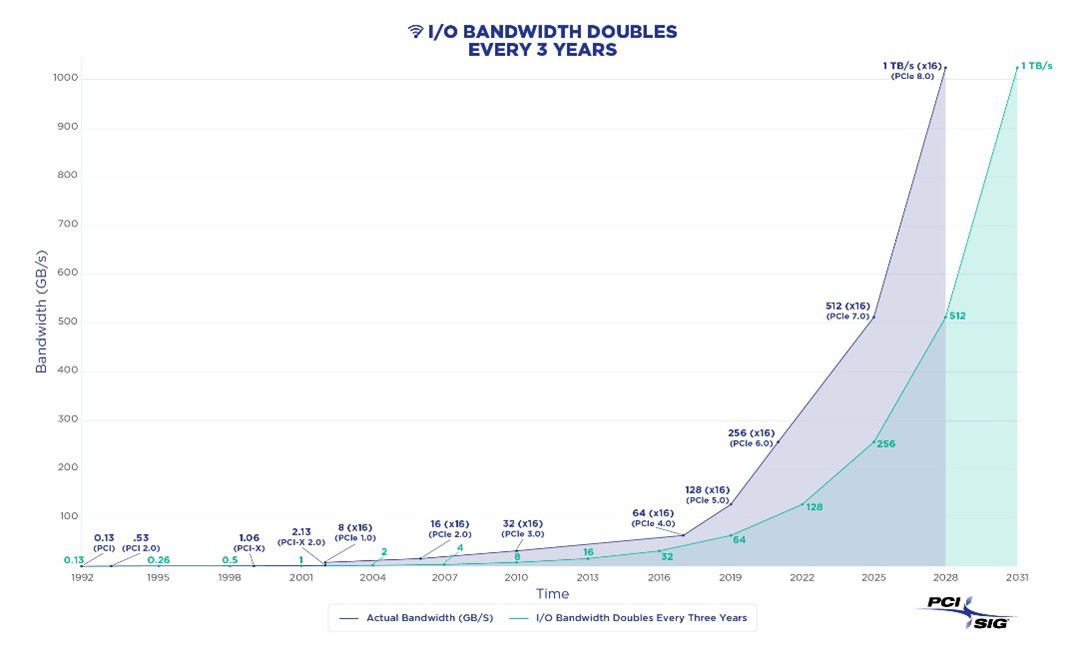

PCIe Transitions Are Accelerating

Seven years passed between the debut of PCIe Gen 3 (8 gigatransfers per second, or GT/s) in 2010 and the release of PCIe Gen 4 (16 GT/s) in 2017.[1] Commercial adoption, meanwhile, took closer to a full decade.[2]

Toward a terabit (per second): PCIe standards are being developed and adopted at a faster rate to keep up with the chip-to-chip interconnect speeds needed by system designers.
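To make the acceleration concrete, the Python sketch below tabulates per-lane signaling rates and computes raw x16 bandwidth. The Gen 3 and Gen 4 dates come from the text; the later rates and release years (Gen 5 in 2019, Gen 6 in 2022, Gen 7 in 2025) are PCI-SIG's published figures rather than claims made above. The raw numbers treat 1 GT/s as 1 Gb/s per lane and ignore encoding and protocol overhead.

```python
# PCIe signaling rate per lane by generation, with specification release years.
# "Raw" bandwidth treats 1 GT/s as 1 Gb/s per lane and ignores encoding/FLIT overhead.

PCIE_GENERATIONS = [
    # (generation, spec release year, GT/s per lane)
    (3, 2010, 8),
    (4, 2017, 16),
    (5, 2019, 32),
    (6, 2022, 64),
    (7, 2025, 128),
]


def raw_gbps(gt_per_s: int, lanes: int = 16) -> int:
    """Raw one-direction link bandwidth in Gb/s for the given lane count."""
    return gt_per_s * lanes


def release_gaps(generations):
    """Years between consecutive specification releases."""
    years = [year for _, year, _ in generations]
    return [b - a for a, b in zip(years, years[1:])]


for gen, year, rate in PCIE_GENERATIONS:
    print(f"Gen {gen} ({year}): {rate:>3} GT/s/lane, "
          f"x16 raw = {raw_gbps(rate):>4} Gb/s per direction")

print("gaps between releases (years):", release_gaps(PCIE_GENERATIONS))
```

On this simplified basis, a Gen 6 x16 link crosses 1 Tb/s raw per direction, which is one way to read the "toward a terabit" framing, while the gap between spec releases shrinks from seven years to two or three.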

By Nicola Bramante, Senior Principal Engineer

Transimpedance amplifiers (TIAs) are one of the unsung heroes of the cloud and AI era.

At the recent OFC 2025 event in San Francisco, exhibitors demonstrated the latest progress on 1.6T optical modules featuring Marvell 200G TIAs. Recognized by multiple hyperscalers for their superior performance, Marvell 200G TIAs are becoming a standard component in 200G/lane optical modules for 1.6T deployments.

TIAs work alongside the photodetectors that capture incoming optical signals: the photodetector turns light into a small electrical current, and the TIA converts that current into a usable voltage signal so the underlying data can be transmitted between, and used by, servers and processors in data centers and in scale-up and scale-out networks. Put another way, TIAs allow data to travel from photons to electrons. TIAs also amplify the signals for optical digital signal processors (DSPs), which filter out noise and preserve signal integrity.
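The conversion chain can be sketched with two textbook relations: the photocurrent equals the photodiode's responsivity times the received optical power, and the TIA's output voltage equals that current times its transimpedance gain. The operating point below (0 dBm input, 0.8 A/W responsivity, 500-ohm transimpedance) is purely hypothetical and is not a specification of any Marvell TIA.

```python
# Optical-to-electrical chain around a TIA (all values hypothetical):
#   photocurrent  I = R * P      (R: photodiode responsivity, A/W)
#   TIA output    V = I * Z_T    (Z_T: transimpedance gain, ohms)


def dbm_to_watts(p_dbm: float) -> float:
    """Convert optical power in dBm to watts (0 dBm = 1 mW)."""
    return 10 ** (p_dbm / 10) / 1000


def tia_output_volts(p_dbm: float, responsivity_a_per_w: float, z_t_ohms: float) -> float:
    """Output voltage of an ideal TIA driven by a photodiode."""
    photocurrent_a = responsivity_a_per_w * dbm_to_watts(p_dbm)
    return photocurrent_a * z_t_ohms


# Hypothetical operating point: 0 dBm input, 0.8 A/W, 500-ohm transimpedance.
v = tia_output_volts(0.0, 0.8, 500.0)
print(f"{v * 1000:.1f} mV")  # prints 400.0 mV
```

A real 200G-class TIA must also deliver this gain across tens of gigahertz of bandwidth with low noise, which this DC-only sketch does not capture.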

And they are pervasive. Virtually every data link inside a data center longer than three meters includes an optical module, and hence a TIA, at each end. TIAs are critical components of fully retimed optics (FRO), transmit retimed optics (TRO) and linear pluggable optics (LPO), which enable scale-up servers with hundreds of XPUs. They are equally essential to active optical cables (AOCs) and other emerging technologies, including co-packaged optics (CPO), where TIAs are integrated into optical engines that can sit on the same substrates as switch or XPU ASICs. TIAs are also essential for long-distance ZR/ZR+ interconnects, which have become the leading solution for connecting data centers and telecom infrastructure. Overall, TIAs are a must-have component for any optical interconnect solution, and the market for interconnects is expected to triple to $11.5 billion by 2030, according to LightCounting.
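Tripling to $11.5 billion implies a starting market of roughly $3.8 billion. The growth rate depends on the base year, which the forecast quoted above does not state; the sketch below assumes 2024 purely for illustration.

```python
# Implied figures from "expected to triple to $11.5 billion by 2030".
# The base year is NOT given in the text; 2024 is an assumption.

TARGET_USD_B = 11.5
GROWTH_MULTIPLE = 3.0
BASE_YEAR, TARGET_YEAR = 2024, 2030  # base year assumed


def implied_cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate that yields `multiple` over `years` years."""
    return multiple ** (1 / years) - 1


base_usd_b = TARGET_USD_B / GROWTH_MULTIPLE
cagr = implied_cagr(GROWTH_MULTIPLE, TARGET_YEAR - BASE_YEAR)
print(f"implied base-year market: ${base_usd_b:.2f}B")
print(f"implied CAGR {BASE_YEAR}-{TARGET_YEAR}: {cagr:.1%}")
```

With a different base year, the implied annual growth rate changes while the implied starting value stays the same.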

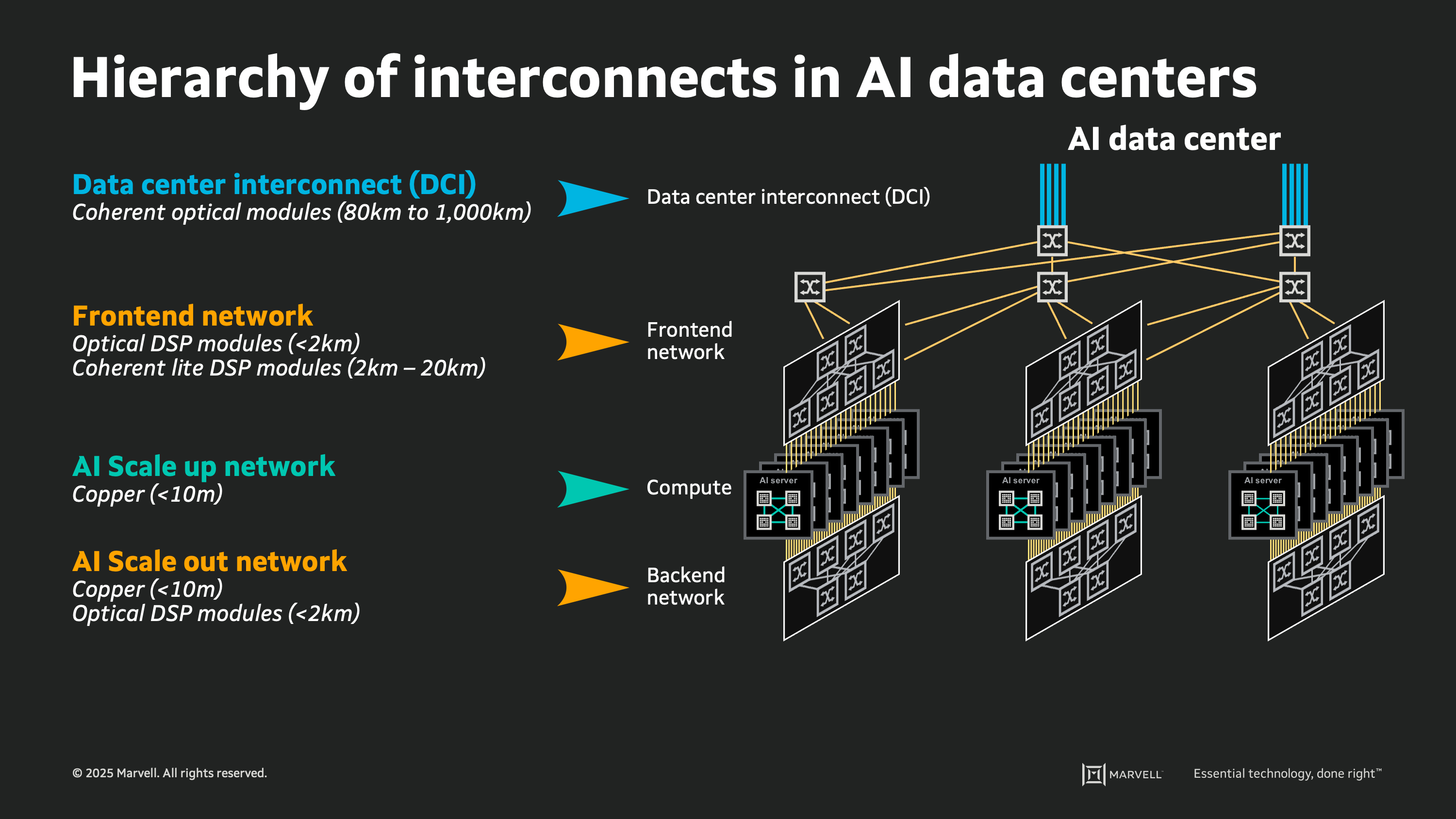

The rapid expansion in the size and capacity of AI workloads is significantly affecting both computing and network technologies in the modern data center. Data centers are continuously evolving to accommodate higher-performance GPUs and AI accelerators (XPUs), increased memory capacities, and a push toward lower-latency architectures for arranging these elements. The desire for larger clusters with shorter compute times has driven a heightened focus on networking interconnects, with designers embracing state-of-the-art technologies to ensure efficient data movement and communication among the components of the AI cloud.

A large-scale AI cloud data center can contain hundreds of thousands, or even millions, of individual links between the devices performing compute, switching and storage functions. Inside the cloud is a tightly ordered fabric of high-speed interconnects: webs of copper wire and glass fiber, each carrying digital signals at roughly 100 billion bits per second. On close inspection, the type of link chosen for each connection follows a logical pattern, which can be analyzed by considering the physical attributes of the different link types.

For inspiration, we can look back 80 years to the origins of modern computing, when John von Neumann posed the concept of a memory hierarchy for computer architectures.[1] In 1945, von Neumann proposed placing a smaller, faster memory close to the compute circuitry and a larger, slower storage medium farther away, so that a system could deliver both performance and scale. This concept of memory hierarchy is now pervasive, with the terms “cache”, “DRAM” and “flash” part of our everyday language. In today’s AI cloud data centers, we can analyze the hierarchy of interconnects in much the same way: as a layered structure of links, each used strategically according to its inherent physical attributes of speed, power consumption, reach and cost.

The hierarchy of interconnects

This hierarchy of interconnects provides a framework for understanding emerging interconnect technologies and assessing their potential impact on the next generation of AI data centers. By discussing the basic attributes of these technologies in the context of the goals of AI cloud design, we can estimate how they may be deployed in the coming years. By identifying the desired attributes for each use case, along with the key design constraints, we can also predict when new technologies will pass the "tipping point" that enables widespread adoption in future cloud deployments.
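As a toy illustration of the hierarchy idea, the sketch below orders link tiers from cheapest and lowest power to most expensive and picks the first tier whose reach covers a given distance, much as a memory system serves data from the closest level that can hold it. The ACC (about 2.5 m) and AEC (9 m demonstration) reaches echo figures cited elsewhere on this page; the passive DAC reach is an assumption, optics is modeled as unbounded for simplicity, and real link selection also weighs bandwidth, latency and other constraints.

```python
from dataclasses import dataclass

# Toy interconnect hierarchy: tiers ordered from cheapest / lowest power to
# most expensive, each with a maximum reach in meters.
# ACC and AEC reaches echo figures cited in the text; the passive DAC reach
# is an assumption, and optics is treated as unbounded for simplicity.


@dataclass(frozen=True)
class Tier:
    name: str
    max_reach_m: float


HIERARCHY = (
    Tier("passive DAC", 1.0),                  # assumed reach at 200G/lane
    Tier("ACC", 2.5),                          # "up to 2 to 2.5 meters"
    Tier("AEC", 9.0),                          # 9-meter demonstration
    Tier("optics (AOC or optical module)", float("inf")),
)


def pick_link(distance_m: float) -> Tier:
    """Return the lowest tier whose reach covers the requested distance."""
    for tier in HIERARCHY:
        if distance_m <= tier.max_reach_m:
            return tier
    raise ValueError(f"no tier covers {distance_m} m")


for d in (0.5, 2.0, 5.0, 30.0):
    print(f"{d:5.1f} m -> {pick_link(d).name}")
```

In this framing, a new technology reaches its "tipping point" when it can be slotted into the ordered list ahead of a more expensive tier for some range of distances.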