By Kirt Zimmer, Head of Social Media Marketing, Marvell

The OFC 2025 event in San Francisco was so vast that it would be easy to miss a few stellar demos from your favorite optical networking companies. That’s why we took the time to create videos featuring the latest Marvell technology.

Put them all together and you have a wonderful film festival for technophiles. Enjoy!

We spoke with Kishore Atreya, Senior Director of Cloud Platform Marketing at Marvell, who discussed co-packaged optics. Instead of moving data via electrons, a light engine converts electrical signals into photons—unlocking ultra-high-speed, low-power optical data transfer.

The 1.6T and 6.4T light engines from Marvell can be integrated directly into the chip package, minimizing trace lengths, reducing power and enabling true plug-and-play fiber connectivity. They are flexible, scalable, and built for switching, XPUs, and beyond.

By Nicola Bramante, Senior Principal Engineer

Transimpedance amplifiers (TIAs) are one of the unsung heroes of the cloud and AI era.

At the recent OFC 2025 event in San Francisco, exhibitors demonstrated the latest progress on 1.6T optical modules featuring Marvell 200G TIAs. Recognized by multiple hyperscalers for their superior performance, Marvell 200G TIAs are becoming a standard component in 200G/lane optical modules for 1.6T deployments.

TIAs capture incoming optical signals from light detectors and transform the underlying data to be transmitted between and used by servers and processors in data centers and scale-up and scale-out networks. Put another way, TIAs allow data to travel from photons to electrons. TIAs also amplify the signals for optical digital signal processors, which filter out noise and preserve signal integrity.

And they are pervasive. Virtually every data link inside a data center longer than three meters includes an optical module (and hence a TIA) at each end. TIAs are critical components of fully retimed optics (FRO), transmit retimed optics (TRO) and linear pluggable optics (LPO), enabling scale-up servers with hundreds of XPUs, active optical cables (AOC), and other emerging technologies, including co-packaged optics (CPO), where TIAs are integrated into optical engines that can sit on the same substrates where switch or XPU ASICs are mounted. TIAs are also essential for long-distance ZR/ZR+ interconnects, which have become the leading solution for connecting data centers and telecom infrastructure. Overall, TIAs are a must-have component for any optical interconnect solution, and the market for interconnects is expected to triple to $11.5 billion by 2030, according to LightCounting.

By Kirt Zimmer, Head of Social Media Marketing

Even in the very practical world of engineering, heart-warming stories can inspire. A perfect example of this has just transpired.

Dr. Radha Nagarajan has been elected to the National Academy of Engineering. He serves as Marvell’s Senior Vice President and Chief Technology Officer, Optical Engineering, in the Datacenter Engineering Group.

Election to the National Academy of Engineering is among the highest professional distinctions accorded to an engineer, akin to making it into the Hall of Fame of Engineering, if such a thing existed. NAE membership honors those who have made outstanding contributions to “engineering research, practice, or education.” NAE members, who number more than 2,600, are highly accomplished engineering professionals representing a broad spectrum of engineering disciplines working in business, academia, and government.

By Michael Kanellos, Head of Influencer Relations, Marvell

Data infrastructure needs more: more capacity, speed, efficiency, bandwidth and, ultimately, more data centers. The number of data centers owned by the top four cloud operators has grown by 73% since 2020¹, while total worldwide data center capacity is expected to double to 79 megawatts (MW) in the near future².

Aquila, the industry’s first O-band coherent DSP, marks a new chapter in optical technology. O-band optics lower the power consumption and complexity of optical modules for links ranging from two to 20 kilometers. O-band modules are longer in reach than PAM4-based optical modules used inside data centers and shorter than C-band and L-band coherent modules. They provide users with an optimized solution for the growing number of data center campuses emerging to manage the expected AI data traffic.

Take a deep dive into our O-band technology with Xi Wang’s blog, “O-Band Coherent: An Idea Whose Time Is (Nearly) Here,” originally published in March, below:

O-Band Coherent: An Idea Whose Time Is (Nearly) Here

By Xi Wang, Vice President of Product Marketing of Optical Connectivity, Marvell

Over the last 20 years, data rates for optical technology have climbed 1000x while power per bit has declined by 100x, a stunning trajectory that in many ways paved the way for the cloud, mobile Internet and streaming media.
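As a rough illustration of what those two figures imply when taken together, the sketch below treats link power as data rate multiplied by energy per bit. Only the 1000x and 100x ratios come from the text; the baseline values are hypothetical placeholders chosen for easy arithmetic, not Marvell data.

```python
# Illustrative arithmetic only. The two ratios come from the text;
# the baseline rate and energy-per-bit values are hypothetical placeholders.
RATE_GROWTH = 1000          # data rate multiplier over roughly 20 years
ENERGY_PER_BIT_DROP = 100   # factor by which power per bit declined


def link_power_watts(rate_gbps: float, picojoules_per_bit: float) -> float:
    """Power (W) = bits per second * joules per bit."""
    return rate_gbps * 1e9 * picojoules_per_bit * 1e-12


base_rate_gbps = 1.0        # hypothetical starting data rate
base_pj_per_bit = 1000.0    # hypothetical starting energy per bit

old_power = link_power_watts(base_rate_gbps, base_pj_per_bit)
new_power = link_power_watts(base_rate_gbps * RATE_GROWTH,
                             base_pj_per_bit / ENERGY_PER_BIT_DROP)

power_ratio = new_power / old_power
print(f"Power per link changes by about {power_ratio:.0f}x")
```

Under this simple model, a link that is 1000x faster at 100x lower energy per bit still draws roughly 10x the power, which is one reason efficiency stays in focus as bandwidth demand grows.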

AI represents the next inflection point in bandwidth demand. Servers powered by AI accelerators and GPUs have far greater bandwidth needs than typical cloud servers: seven high-end GPUs alone can max out a switch that ordinarily can handle 500 cloud two-processor servers. Just as important, demand for AI services, and higher-value AI services such as medical imaging or predictive maintenance, will further drive the need for more bandwidth. The AI market alone is expected to reach $407 billion by 2027.

By Michael Kanellos, Head of Influencer Relations, Marvell and Vienna Alexander, Marketing Content Intern, Marvell

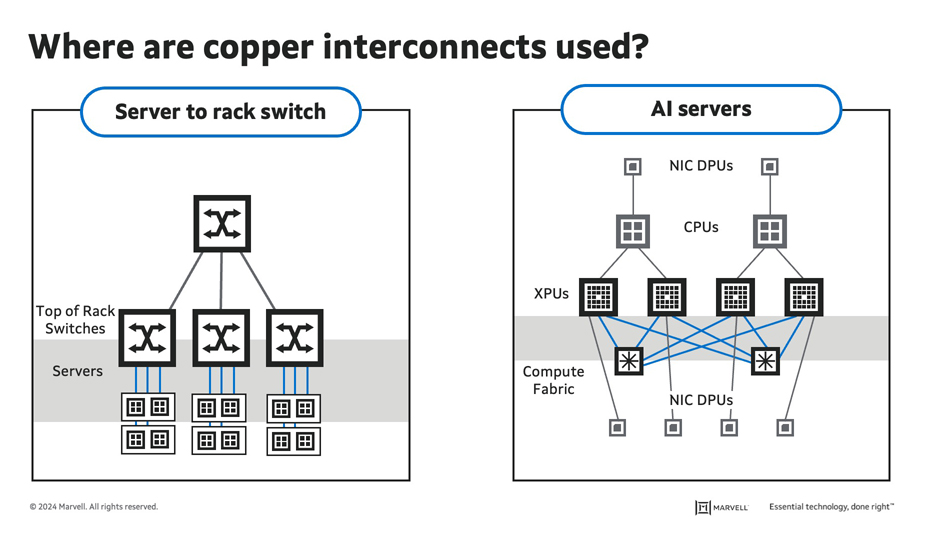

Is copper dead?

Not by a long shot. Copper technology, however, will undergo a dramatic transformation over the next several years. Here’s a guide.

1. Copper is the Goldilocks Metal

Copper has been a staple ingredient for interconnects since the days of Colossus and ENIAC. It is a superior conductor, costs far less than gold or silver and offers relatively low resistance. Copper also replaced aluminum for connecting transistors inside of chips in the late 1990s because its 40% lower resistance improved performance by 15%¹.

Copper is also simple, reliable and hardy. Interconnects are essentially wires. By contrast, optical interconnects require a host of components such as optical DSPs, transimpedance amplifiers and lasers.

“The first rule in optical technology is ‘Whatever you can do in copper, do in copper,’” says Dr. Loi Nguyen, EVP of optical technology at Marvell.

2. But It’s Still a Metal

Nonetheless, electrical resistance exists. As bandwidth and network speeds increase, so do heat and power consumption. Additionally, increasing bandwidth reduces the reach, so doubling the data rate reduces distance by roughly 30–50% (see below).
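To make the reach penalty concrete, here is a minimal sketch that applies the 30–50% reduction from the paragraph above to each successive doubling of the data rate. The starting reach of 4 meters and the function name are hypothetical, chosen only for illustration; real reach depends on cable gauge, signaling and equalization.

```python
def reach_after_doublings(start_reach_m: float, doublings: int,
                          loss_per_doubling: float) -> float:
    """Reach remaining after the data rate has doubled `doublings` times,
    assuming each doubling removes a fixed fraction of the reach."""
    return start_reach_m * (1.0 - loss_per_doubling) ** doublings


start_reach_m = 4.0  # hypothetical starting reach, not a Marvell figure

for n in range(4):
    worst = reach_after_doublings(start_reach_m, n, 0.50)  # 50% loss per doubling
    best = reach_after_doublings(start_reach_m, n, 0.30)   # 30% loss per doubling
    print(f"{2 ** n}x data rate: reach between {worst:.2f} m and {best:.2f} m")
```

Even at the optimistic end of the range, three doublings leave only about a third of the original reach in this simplified model.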

As a result, optical technologies have replaced copper in interconnects five meters or longer in data centers and telecommunication networks.

Source: Marvell