Auto-Load Balancing and Teralynx 10: Optimizing Cloud and AI Infrastructure

Milliseconds matter.

It’s one of the fundamental laws of AI and cloud computing. Reducing the time required to run an individual workload frees up infrastructure to perform more work, which in turn gives cloud operators an opportunity to generate more revenue. Because they perform billions of simultaneous operations and run on a 24/7/365 basis, time literally is money to cloud operators.

Marvell specifically designed the Marvell® Teralynx® 10 switch to optimize infrastructure for the intense performance demands of the cloud and AI era. Benchmark tests show that Teralynx 10 delivers a low, predictable latency of 500 nanoseconds, a critical precursor to reducing time-to-completion.1 Its 512-radix design also means that large clusters or data centers with networks built around the device, rather than around 256-radix switch silicon, need up to 40% fewer switches, 33% fewer networking layers and 40% fewer connections to provide an equivalent level of aggregate bandwidth.2 Less equipment, in turn, paves the way for lower costs, lower energy consumption and better use of real estate.
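One way to see where a layer reduction of this kind can come from is the textbook sizing of a non-blocking folded-Clos (fat-tree) fabric, in which every tier below the top splits its ports evenly between downlinks and uplinks. The sketch below assumes that idealized model; it is not Marvell's sizing methodology, and the 100,000-endpoint cluster is a hypothetical example. Under this model, moving from three tiers to two is a one-third reduction in layers.

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Endpoints supported by an idealized non-blocking fat tree.

    A single switch (1 tier) serves `radix` endpoints. Each additional tier
    multiplies capacity by radix/2, because lower-tier switches spend half
    of their ports on uplinks. (Textbook model, assumed for illustration.)
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix: int, endpoints: int) -> int:
    """Smallest number of switching tiers that can connect `endpoints`."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


if __name__ == "__main__":
    endpoints = 100_000  # hypothetical cluster size
    t512 = tiers_needed(512, endpoints)
    t256 = tiers_needed(256, endpoints)
    print(f"512-radix: {t512} tiers, 256-radix: {t256} tiers")
    print(f"layer reduction: {1 - t512 / t256:.0%}")
```

In this model a 512-radix fabric stays at two tiers up to 131,072 endpoints, while a 256-radix fabric needs a third tier beyond 32,768. The switch and connection savings quoted above depend on deployment details this sketch does not capture.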

Recently, we also teamed up with Keysight to examine another critical feature in greater depth: auto-load balancing (ALB), the ability of Teralynx 10 to even out traffic across ports based on current and anticipated loads. As on a highway system, spreading traffic more evenly across the lanes of a network prevents congestion and reduces cumulative travel time. Without it, a bottleneck in one location becomes a problem for the entire system.
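To make the highway analogy concrete, the sketch below contrasts static hash-based placement (the ECMP-style approach common in Ethernet fabrics) with a simple load-aware policy that sends each new flow to the currently least-loaded port. It is an illustrative model only: the flow sizes, port count and greedy least-loaded rule are assumptions, not a description of how Teralynx 10 implements ALB.

```python
import zlib


def hash_placement(flows, num_ports):
    """Static ECMP-style placement: a hash of the flow ID picks the port,
    regardless of how busy that port already is."""
    loads = [0.0] * num_ports
    for flow_id, gbps in flows:
        port = zlib.crc32(flow_id.encode()) % num_ports
        loads[port] += gbps
    return loads


def load_aware_placement(flows, num_ports):
    """Greedy load-aware placement: each new flow goes to the port
    carrying the least traffic so far (ties go to the lowest index)."""
    loads = [0.0] * num_ports
    for _flow_id, gbps in flows:
        port = min(range(num_ports), key=loads.__getitem__)
        loads[port] += gbps
    return loads


if __name__ == "__main__":
    # Hypothetical workload: eight 100 Gb/s RDMA flows over four uplinks.
    flows = [(f"qp-{i}", 100.0) for i in range(8)]
    print("hash-based:", hash_placement(flows, 4))
    print("load-aware:", load_aware_placement(flows, 4))
```

For this input the load-aware policy always ends at 200 Gb/s per port. The hash-based result depends on how the flow IDs happen to hash, and any collision leaves one port overloaded while another sits underused. A real ALB implementation also reacts as loads change over time, which this static sketch does not model.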

Better Load Balancing, Better Traffic Flow

To test whether smarter load balancing yields better load distribution, we created a scenario with Keysight AI Data Center Builder (KAI DC Builder) to measure port utilization and job completion time across different AI collective workloads. Built around a spine-leaf topology with four nodes, the scenario draws on KAI DC Builder's support for a range of collective algorithms, including all-to-all, all-reduce, all-gather, reduce-scatter, and gather. KAI DC Builder generates RDMA traffic using the RoCEv2 protocol. (In layperson’s terms, KAI DC Builder, together with Keysight’s AresONE-M 800GE hardware platform, let us create a spectrum of test tracks.)
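For readers less familiar with collectives, all-reduce can be built from two of the other operations listed above: a reduce-scatter, in which each rank ends up owning the sum of one chunk, followed by an all-gather that distributes those reduced chunks to every rank. The sketch below simulates the classic ring algorithm in plain Python. It only illustrates the communication pattern; it is not how KAI DC Builder or KCCB implement their collectives.

```python
def ring_all_reduce(buffers):
    """Simulate ring all-reduce over n ranks, each holding n chunks.

    buffers[r][c] is rank r's value for chunk c. Returns new buffers in
    which every rank holds the element-wise sum across all ranks.
    """
    n = len(buffers)
    data = [list(b) for b in buffers]  # copy so the input is untouched
    assert all(len(b) == n for b in data), "expects one chunk per rank"

    # Phase 1: reduce-scatter. At step s, rank r sends chunk (r - s) % n to
    # rank (r + 1) % n, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(r, (r - s) % n, data[r][(r - s) % n]) for r in range(n)]
        for r, c, value in sends:
            data[(r + 1) % n][c] += value
    # Now rank r owns the fully reduced chunk (r + 1) % n.

    # Phase 2: all-gather. At step s, rank r forwards its complete chunk
    # (r + 1 - s) % n to rank (r + 1) % n, which overwrites its copy.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n, data[r][(r + 1 - s) % n]) for r in range(n)]
        for r, c, value in sends:
            data[(r + 1) % n][c] = value
    return data


if __name__ == "__main__":
    # Hypothetical 4-rank job: rank r holds the values [r, r, r, r].
    ranks = [[float(r)] * 4 for r in range(4)]
    print(ring_all_reduce(ranks))
```

Each rank sends 2(n-1) chunks, so every rank puts roughly twice its buffer size on the wire. That sustained, synchronized traffic is why collective workloads are a demanding test of how evenly a fabric spreads load.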

To generate AI traffic workloads, we used the Keysight Collective Communication Benchmark (KCCB) application, which runs as a container on the server alongside supporting Docker containers provided by Keysight.

In our tests, the Keysight AresONE-M 800GE was connected to a Teralynx 10 top-of-rack (ToR) switch via 16 400G OSFP ports. The ToR switch was in turn linked to a Teralynx 10 system configured as a leaf switch. We then measured port utilization and job completion time. All Teralynx 10 systems ran SONiC.

The Tests

We measured Teralynx 10 performance by running common collective communication library (CCL) benchmarks with auto-load balancing both off and on, then compared the results. When reading the completion charts, a rapid ramp is better than a gradual one: it indicates that congestion issues were resolved in less time.

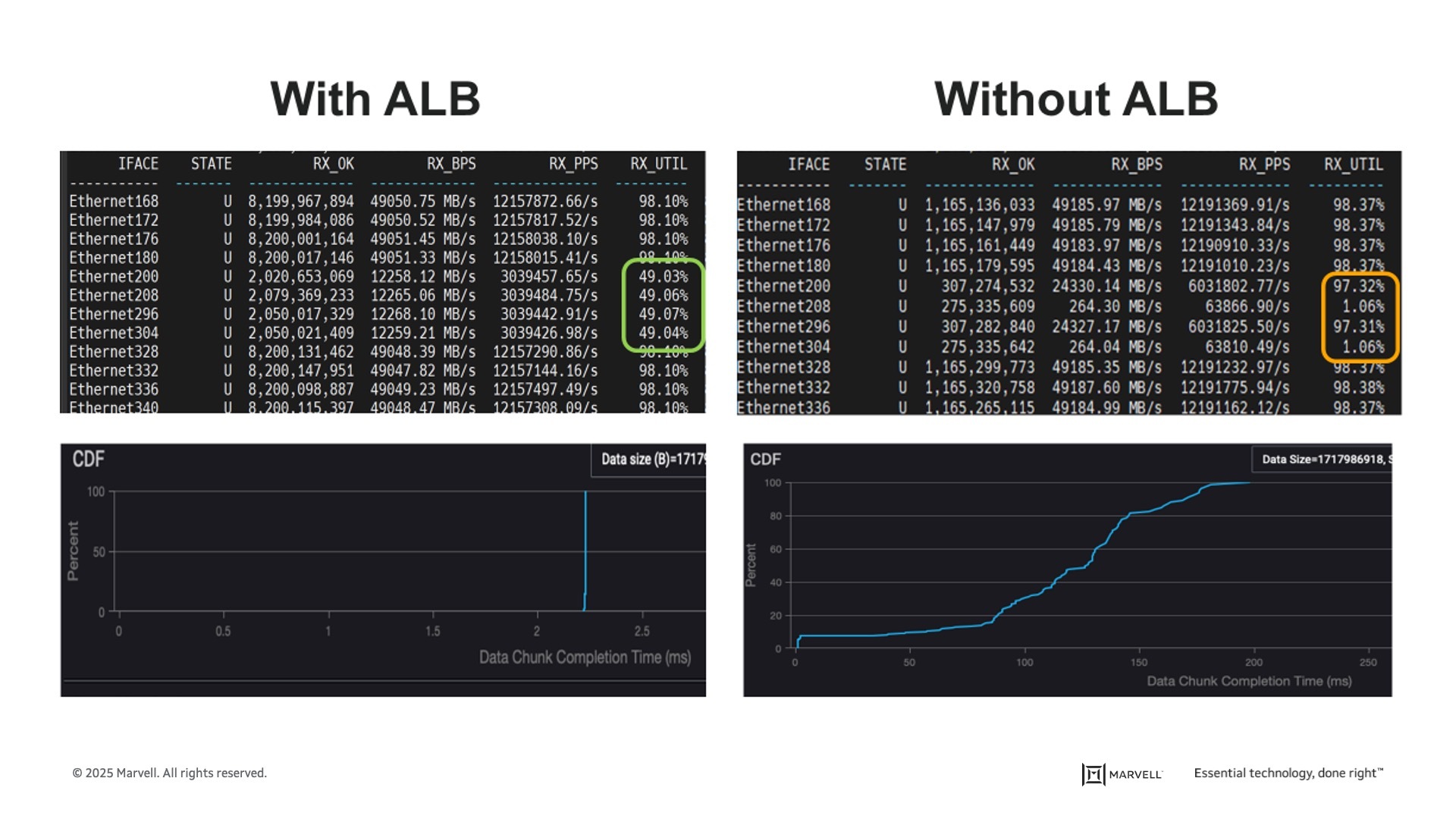

The top two panels of the figure measure port utilization. With ALB, utilization was just under 50% on every port, with variations between ports measured in hundredths of a percentage point (green rectangle). The high and low water marks were 49.07% and 49.03%, respectively.

Without ALB, port utilization was far less balanced, ranging from a clogged 97.32% down to 1.06% (orange rectangle).

The bottom two panels show the impact. With ALB, the workload completed in approximately 2.25 milliseconds. Without ALB, congestion set in from the start: completing the first 20% of the workload took 100 milliseconds. Progress then accelerated but began to plateau again at the 80% mark, and the workload did not complete until 200 milliseconds.3

With auto-load balancing switched on, Teralynx 10 switches showed more consistent signal and data chunk completion times. Source: Marvell and Keysight.
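The utilization and completion figures reported above can be summarized with a few simple metrics: the gap between the busiest and idlest port, Jain's fairness index (1.0 for perfectly even load, falling toward 1/n when one of n ports carries everything), and the ratio of completion times. The sketch below uses only the quoted high and low water marks, since the values for all 16 ports are not listed here, and the approximate completion times as reported; it illustrates the metrics rather than reanalyzing the test data.

```python
def utilization_spread(utils):
    """Percentage-point gap between the busiest and the idlest port."""
    return max(utils) - min(utils)


def jain_fairness(utils):
    """Jain's fairness index: 1.0 for perfectly even load, 1/n at worst."""
    total = sum(utils)
    return total * total / (len(utils) * sum(u * u for u in utils))


def jct_comparison(baseline_ms, improved_ms):
    """Return (speedup factor, fractional reduction in job completion time)."""
    return baseline_ms / improved_ms, 1.0 - improved_ms / baseline_ms


if __name__ == "__main__":
    # Only the high/low water marks are quoted, not all 16 per-port values.
    water_marks = {"ALB on": [49.07, 49.03], "ALB off": [97.32, 1.06]}
    for label, utils in water_marks.items():
        print(f"{label}: spread {utilization_spread(utils):.2f} pts, "
              f"Jain index {jain_fairness(utils):.3f}")

    speedup, reduction = jct_comparison(baseline_ms=200.0, improved_ms=2.25)
    print(f"Job completion: ~{speedup:.1f}x faster, {reduction:.1%} less time")
```

With these numbers, the ALB case scores essentially 1.0 on Jain's index while the non-ALB extremes score about 0.51, close to the 0.5 floor for two values. The reported completion times work out to roughly an 89x speedup, or about 98.9% less time.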

The conclusion: ALB can play a crucial role in keeping infrastructure operating the way it should. Learn more about Marvell’s Teralynx 10 data center Ethernet switch, now available with auto-load balancing.

1. Marvell, July 2024.

2. Marvell Teralynx 10 press and analyst deck, July 2024.

3. Performance tests conducted by Marvell and Keysight.

Kishore Atreya is Senior Director of Cloud Platform Marketing at Marvell.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: data centers, Cloud and Data Infrastructure, sonic, teralynx
