Canada’s Role in the AI Revolution

This blog first appeared in The Future Economy

AI has the potential to transform the way we live. But for AI to become sustainable and pervasive, we also have to transform our computing infrastructure.

The world’s existing technologies, simply put, weren’t designed for the data-intensive, highly parallel computing problems that AI serves up. As a result, AI clusters and data centers aren’t nearly as efficient or elegant as they could be: in many ways, it’s brute-force computing. Power[1] and water[2] consumption in data centers is growing dramatically, and many communities around the world are pushing back on plans to expand data infrastructure.[3]

Canada can and will play a leading role in overcoming these hurdles. Data center expansion is already underway. Data centers currently account for around 1 GW, or 1%, of Canada’s electricity capacity. If all of the projects under review today are approved, that total could grow to 15 GW, enough to power 70% of the homes in the country.[4]
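The capacity figures in this paragraph imply a couple of numbers that are not spelled out. The short sketch below works them out, taking the quoted figures at face value. The variable names are illustrative, and the assumption that total capacity stays flat while data center load grows is ours, not the source's.

```python
# Figures quoted in the paragraph above, taken at face value.
current_dc_gw = 1.0      # data center load today, in GW
current_share = 0.01     # stated as roughly 1% of Canada's electricity capacity
projected_dc_gw = 15.0   # total if every project under review is approved

# Implied total capacity: 1 GW is about 1% of the total.
implied_total_gw = current_dc_gw / current_share

# ASSUMPTION (not from the source): total capacity does not grow.
projected_share = projected_dc_gw / implied_total_gw

# Growth in data center load relative to today.
growth_factor = projected_dc_gw / current_dc_gw

print(f"Implied total capacity: {implied_total_gw:.0f} GW")
print(f"Projected data center share: {projected_share:.0%}")
print(f"Growth in data center load: {growth_factor:.0f}x")
```

Under that flat-capacity assumption, data centers would move from about 1% to about 15% of capacity. Any new generation built alongside the data centers would lower that share.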

As in other regions, data center operators are exploring ways to increase their use of renewables and nuclear power in these new facilities, along with ambient cooling, to reduce the facilities’ carbon footprint. In Alberta, some companies are also exploring adding carbon capture to the design of data centers powered by natural gas. To date, carbon capture has not lived up to its promise.[5] Most carbon capture experiments, however, have been coupled with large-scale industrial plants. It may be worth examining whether carbon capture, combined with mineralization for long-term storage, can work on this smaller scale. If it does, the technology could be exported to other regions.

Fixing facilities, however, is only part of the equation. AI requires a fundamental overhaul in the systems and components that make up our networks.

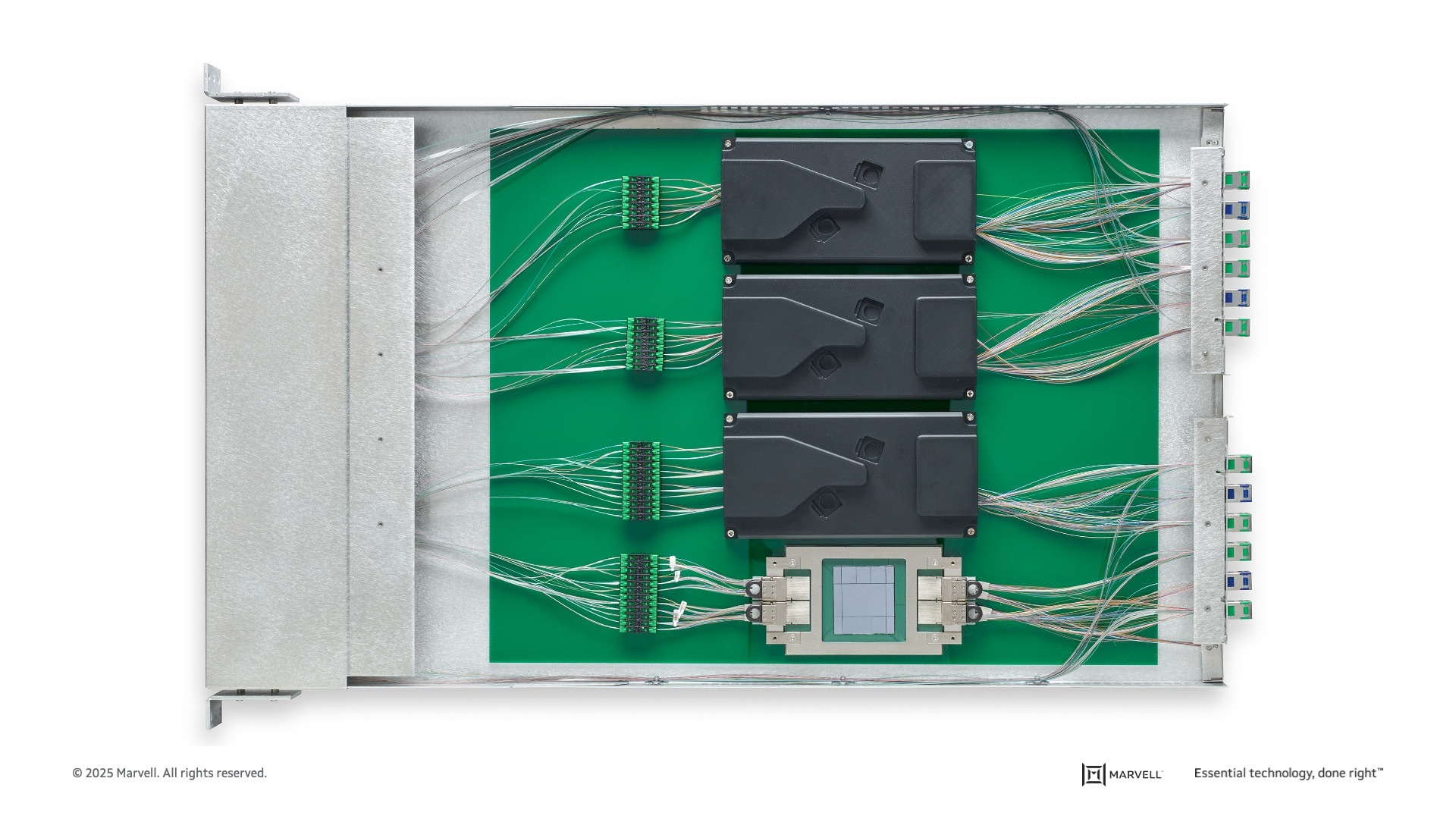

Above: The server of the future. The four AI processors connect to networks through four 6.4T light engines, the four smaller chips on the east-west side of the exposed processor. Coupling optical technology with the processor lowers power per bit while increasing bandwidth.

Consider the fiber-optic interconnects that link together large AI clusters. Twenty-five years ago, interconnects could send 100 megabits of data per second. Today, they can deliver over 1 terabit per second, or 10,000 times faster than the cutting-edge speeds of that era, and future cutting-edge clusters will require millions of them. Meanwhile, power per bit has declined by 1000x over this period.[6]
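The two ratios in this paragraph can be combined with a quick calculation. Since power per link is data rate multiplied by energy per bit, the sketch below estimates how per-link power has changed. It assumes both ratios describe comparable links over the same period; that is our reading, not a claim made in the source. The variable names are illustrative.

```python
# Figures quoted in the paragraph above.
old_rate_bps = 100e6                  # 100 megabits per second
new_rate_bps = 1e12                   # 1 terabit per second
energy_per_bit_improvement = 1000.0   # power per bit fell by 1000x

# Bandwidth gain per interconnect.
bandwidth_gain = new_rate_bps / old_rate_bps

# Power per link = (bits per second) * (joules per bit), so the change in
# per-link power is the bandwidth gain divided by the per-bit improvement.
# ASSUMPTION: both ratios apply to comparable links over the same period.
power_per_link_change = bandwidth_gain / energy_per_bit_improvement

print(f"Bandwidth gain: {bandwidth_gain:,.0f}x")
print(f"Per-link power change: {power_per_link_change:.0f}x")
```

On these numbers, bandwidth has grown faster than efficiency has improved, so each link would draw roughly ten times the power of its predecessor. That figure is then multiplied by the number of links in a cluster.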

The problem? them.

For the rest of the story, please go to The Future Economy.

Nizar Rida, Vice President of Engineering and Country Manager for Marvell Canada

1. US Dept. of Energy, December 2024.

2. UC Riverside and UT Arlington, October 2023.

3. Radha Nagarajan, Data Center Knowledge, September 2023.

4. RBC Climate Report, December 2024.

5. Scientific American, March 2024.

6. Photonics Spectra, March 2024.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: data centers, AI, AI infrastructure

Copyright © 2026 Marvell, All rights reserved.