RoCE or iWARP for Low Latency?

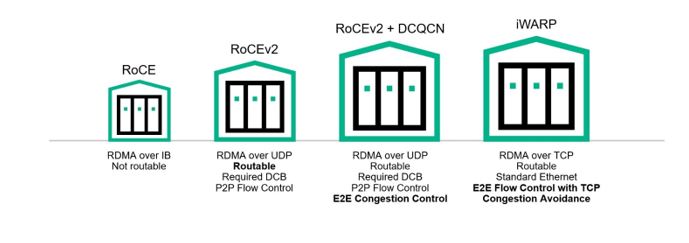

Today, Remote Direct Memory Access (RDMA) is used primarily in high-performance computing and cloud environments to reduce latency across the network. Enterprise customers will soon require the low-latency networking that RDMA offers so that they can support applications such as Oracle and SAP and implement software-defined storage using Windows Storage Spaces Direct (S2D) or VMware vSAN. Three protocols can be used in an RDMA deployment: RDMA over InfiniBand, RDMA over Converged Ethernet (RoCE), and RDMA over iWARP. With several possible routes to go down, how do you pick the right protocol for your specific tasks?

In the enterprise sector, Ethernet is by far the most popular transport technology. Consequently, we can ignore the InfiniBand option, as it would require a forklift upgrade to the existing I/O infrastructure, making it far too costly for the vast majority of enterprise data centers. That leaves RoCE and iWARP. Both can provide low-latency connectivity over Ethernet networks. But which is right for you?

Let’s start by looking at the fundamental differences between these two protocols. RoCE is the more popular of the two and is already being used by many cloud hyper-scale customers worldwide. RDMA-enabled adapters running RoCE are available from a variety of vendors, including Marvell.

RoCE provides latency at the adapter in the 1-5 µs range but requires a lossless Ethernet network to achieve low-latency operation. This means that the Ethernet switches in the network must support data center bridging (DCB) and priority flow control (PFC) so that lossless traffic is maintained, and they will likely have to be reconfigured before RoCE can be used. The challenge with a lossless, or converged, Ethernet environment is that configuration is complex and scalability can be very limited in a modern enterprise context.

It is not impossible to use RoCE at scale, but doing so requires additional congestion control mechanisms, such as Data Center Quantized Congestion Notification (DCQCN), which in turn call for large, highly experienced teams of network engineers and administrators. Hyper-scale customers have access to such teams; not all enterprise customers do, since their staffing and budgets can be more limited.


Going back through the history of converged Ethernet environments, one need look no further than Fibre Channel over Converged Ethernet (FCoE) to see the size of the challenge involved. Five years ago, many analysts and industry experts claimed FCoE would replace Fibre Channel in the data center. That simply didn’t happen, because of the complexity associated with using converged Ethernet networks at scale. FCoE still survives, but only in closed environments like HPE BladeSystem or HPE Synergy servers, where the network properties and scale are carefully controlled. These are single-hop environments with only a few connections in each system.

Finally, we come to iWARP. Its advantage is that it runs on today’s standard TCP/IP networks, with no lossless Ethernet configuration required. It provides latency at the adapter in the range of 10-15 µs. This is higher than what one can achieve by implementing RoCE but is still orders of magnitude below that of standard Ethernet adapters.
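To make the trade-off concrete, here is a minimal Python sketch that records the adapter latency ranges and network requirements quoted in this post and filters the two protocols against a latency budget. The names `RdmaProfile`, `PROFILES` and `candidates` are hypothetical, chosen only for illustration, and the numbers are the ranges stated in the text, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RdmaProfile:
    name: str
    latency_us: tuple[int, int]    # (low, high) adapter latency range quoted in this post
    needs_lossless_ethernet: bool  # True if switches must provide DCB/PFC


# Assumption: these values simply restate the ranges given in the article.
PROFILES = [
    RdmaProfile("RoCE", (1, 5), needs_lossless_ethernet=True),
    RdmaProfile("iWARP", (10, 15), needs_lossless_ethernet=False),
]


def candidates(latency_budget_us: float, lossless_available: bool) -> list[str]:
    """Return protocols whose upper quoted latency fits the budget
    and whose network requirement can be met."""
    return [
        p.name
        for p in PROFILES
        if p.latency_us[1] <= latency_budget_us
        and (lossless_available or not p.needs_lossless_ethernet)
    ]


print(candidates(latency_budget_us=20, lossless_available=False))  # ['iWARP']
print(candidates(latency_budget_us=20, lossless_available=True))   # ['RoCE', 'iWARP']
print(candidates(latency_budget_us=8, lossless_available=False))   # []
```

The last call shows the uncomfortable case: a budget tighter than iWARP's quoted range on a network that cannot be made lossless leaves no candidate, which is when the switch reconfiguration effort has to be weighed.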

They say that if all you have is a hammer, everything looks like a nail. The same applies to vendors touting their RDMA-enabled adapters. Most vendors support only one protocol, so of course that is the protocol they will recommend. Here at Marvell, we are unique in that with our Universal RDMA technology, a customer can use both RoCE and iWARP on the same adapter. This gives us more credibility when making recommendations and means that we are effectively protocol agnostic. That matters from a customer standpoint, because it means we look at what is the best fit for their application criteria.

So which RDMA protocol do you use when? When latency is the number one criterion and scalability is not a concern, the choice should be RoCE. You will see RoCE implemented as the back-end network in modern disk arrays, between the controller node and NVMe drives. You will also find RoCE deployed within a rack, or where there are only one or two top-of-rack switches and subnets to contend with. Conversely, when latency is a key requirement but ease of use and scalability are also high priorities, iWARP is the best candidate. It runs on the existing network infrastructure and can easily scale between racks and even over long distances across data centers. A great use case for iWARP is as the network connectivity option for Microsoft Storage Spaces Direct implementations.
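The rule of thumb above can be written down as a small decision helper. This is only a sketch of the guidance in this post, not a sizing tool: the function name and its two boolean parameters are hypothetical, and the "either" fallback for cases the post does not cover is an assumption.

```python
def recommend_rdma_protocol(latency_is_top_priority: bool,
                            needs_scale_or_ease_of_use: bool) -> str:
    """Encode the post's guidance as a simple rule of thumb."""
    if needs_scale_or_ease_of_use:
        # Scaling across racks or data centers, or avoiding lossless
        # Ethernet configuration, points to iWARP.
        return "iWARP"
    if latency_is_top_priority:
        # Lowest latency within a rack or a small, controlled network.
        return "RoCE"
    # Assumption: the post gives no guidance here, so no preference is stated.
    return "either"


# Back-end network of a disk array: latency first, small and controlled scope.
print(recommend_rdma_protocol(True, False))   # RoCE

# Storage Spaces Direct spanning several racks: latency matters, scale too.
print(recommend_rdma_protocol(True, True))    # iWARP
```

Checking scalability and ease of use first mirrors the ordering in the text: RoCE is recommended only when those two concerns are explicitly absent.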

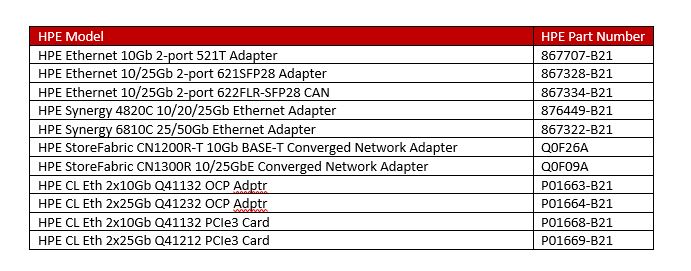

The good news for enterprise customers is that several Marvell® FastLinQ® Ethernet Adapters from HPE support Universal RDMA, so they can take advantage of low-latency RDMA in the way that best suits them. Here’s a list of HPE Ethernet adapters that currently support both RoCE and iWARP RDMA.

With RDMA-enabled adapters for HPE ProLiant, Apollo, HPE Synergy and HPE Cloudline servers, Marvell has a strong portfolio of 10GbE and 25GbE connectivity solutions for data centers. In addition to supporting low-latency RDMA, these adapters are also NVMe-ready: they can accommodate NVMe over Ethernet fabrics running RoCE or iWARP, as well as NVMe over TCP (with no RDMA). They are a great choice for future-proofing the data center today for the workloads of tomorrow.


For more information on these and other Marvell I/O technologies for HPE, go to www.marvell.com/hpe.

If you’d like to talk with one of our I/O experts in the field, you’ll find contact info here.

Copyright © 2026 Marvell, All rights reserved.
