NVMe-based Network Fabrics Blow Through Legacy Rotational Media Limitations in the Data Center: Speed and Cost Benefits of NVMe SSD Shared Storage Now in Its Second Generation

Marvell Debuts 88SS1092 Second-Gen NVM Express SSD Controller at OCP Summit

SSDs in the Data Center: NVMe and Where We’ve Been

When solid-state drives (SSDs) were first introduced into the data center, the infrastructure required them to work within the confines of the then-current bus technologies, such as Serial ATA (SATA) and Serial Attached SCSI (SAS), which were developed for rotational media. Even the fastest hard disk drives (HDDs) could not keep up with an SSD, and neither could the interfaces built for them, creating a bottleneck that prevented SSD technology from being fully exploited. PCI Express (PCIe), a high-bandwidth bus already in place as the transport layer for networking, graphics and other add-in cards, became the next viable option, but PCIe SSDs at first still relied on legacy HDD-oriented SCSI or SATA protocols. The NVM Express (NVMe) industry working group was therefore formed to create a standardized set of protocols and commands designed for the PCIe bus, with multiple parallel command paths that can take full advantage of SSDs in the data center. The NVMe specification was designed from the ground up to deliver high-bandwidth, low-latency storage access for current and future non-volatile memory (NVM) technologies.

The NVMe interface provides an optimized command issue and completion path. It supports parallel operation by allowing up to 64K commands in a single I/O queue to the device. It also adds support for many enterprise capabilities, such as end-to-end data protection (compatible with the T10 DIF and DIX standards), enhanced error reporting and virtualization. In short, NVMe is a scalable host controller interface designed to meet the needs of enterprise, data center and client systems that use PCIe-based SSDs, helping to maximize SSD performance.
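To make the command issue and completion path more concrete, here is a minimal Python sketch of one NVMe-style queue pair: the host places commands at the tail of a circular submission queue, the controller fetches them and posts entries to a completion queue, and a phase tag that flips on every wrap tells the host which completion entries are new. This is a simplified model under stated assumptions, not the specification's data layout or any driver's code: commands are bare integer IDs, doorbell writes are reduced to comments, and the class and method names (`QueuePair`, `submit`, `controller_process`, `reap`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CompletionEntry:
    command_id: int
    status: int
    phase: int  # phase tag written by the controller; flips on every queue wrap


class QueuePair:
    """Toy model of one NVMe-style submission/completion queue pair (hypothetical names)."""

    def __init__(self, depth: int):
        if not 2 <= depth <= 65536:
            raise ValueError("I/O queue depth must be between 2 and 64K entries")
        self.depth = depth
        self.sq: List[Optional[int]] = [None] * depth
        self.sq_head = 0  # next entry the controller will fetch
        self.sq_tail = 0  # next free slot the host will fill
        self.cq: List[Optional[CompletionEntry]] = [None] * depth
        self.cq_head = 0  # next completion the host will consume
        self.cq_tail = 0  # next slot the controller will write
        self.ctrl_phase = 1  # controller posts phase 1 on its first pass
        self.host_phase = 1  # host treats phase 1 as "new" on its first pass

    def submit(self, command_id: int) -> bool:
        """Host: queue a command; the queue is full when the tail is one slot behind the head."""
        if (self.sq_tail + 1) % self.depth == self.sq_head:
            return False
        self.sq[self.sq_tail] = command_id
        self.sq_tail = (self.sq_tail + 1) % self.depth
        # Real hardware: the host now writes the new tail to the SQ tail doorbell.
        return True

    def controller_process(self) -> None:
        """Controller: fetch queued commands and post a completion for each one."""
        while self.sq_head != self.sq_tail:
            if (self.cq_tail + 1) % self.depth == self.cq_head:
                break  # completion queue full; wait for the host to reap
            command_id = self.sq[self.sq_head]
            self.sq_head = (self.sq_head + 1) % self.depth
            self.cq[self.cq_tail] = CompletionEntry(command_id, 0, self.ctrl_phase)
            self.cq_tail = (self.cq_tail + 1) % self.depth
            if self.cq_tail == 0:
                self.ctrl_phase ^= 1  # wrapped: flip the phase tag for the next pass

    def reap(self) -> List[int]:
        """Host: consume completions whose phase tag marks them as new."""
        done: List[int] = []
        while True:
            entry = self.cq[self.cq_head]
            if entry is None or entry.phase != self.host_phase:
                break
            done.append(entry.command_id)
            self.cq_head = (self.cq_head + 1) % self.depth
            if self.cq_head == 0:
                self.host_phase ^= 1  # wrapped: expect the flipped phase next
        # Real hardware: the host now writes the new head to the CQ head doorbell.
        return done


qp = QueuePair(depth=4)
print([qp.submit(cid) for cid in (1, 2, 3, 4)])  # the fourth submit finds the queue full
qp.controller_process()
print(qp.reap())
```

Because a circular queue is treated as full when the tail sits one slot behind the head, a queue created at the 64K-entry maximum can hold at most 65,535 outstanding commands at once.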

SSD Network Fabrics

New NVMe controllers from companies such as Marvell allow data centers to share storage, further maximizing cost and performance efficiency. With SSD network fabrics, the SSDs in individual servers can be clustered into a shared pool, making better use of the data center's total storage capacity. In addition, placing SSDs in a common enclosure that several servers can reach lets those servers share access to the same data. These new compute models therefore allow data centers not only to take full advantage of SSD performance, but also to deploy SSDs more economically, lowering overall cost and streamlining maintenance. Instead of adding more SSDs to individual servers, under-utilized SSDs can be tapped and their capacity redeployed to over-allocated servers.

Here’s a simple example of how these network fabrics work. Suppose a system has ten servers, each with an SSD on its PCIe bus. An SSD cluster can be formed from all ten SSDs, providing both additional storage and a way to pool storage and share data access. If one server's SSD is only 10 percent utilized while another server is over-allocated, the cluster can give the over-allocated server more storage without adding SSDs to either server. Multiply this example across hundreds of servers, and the gains in cost, maintenance and performance efficiency grow accordingly.

Marvell helped pave the way for these new compute models in the data center when it introduced its first NVMe SSD controller. That product supported up to four lanes of PCIe 3.0 and could serve as either a full 4GB/s (x4) or a 2GB/s (x2) endpoint, depending on host system configuration. It delivered high IOPS performance using NVMe's advanced command handling. To make full use of the high-speed PCIe connection, Marvell's NVMe design streamlined PCIe link data flows through extensive hardware automation, which helped alleviate legacy host-control bottlenecks and unleash the full performance of flash.
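The 4GB/s and 2GB/s figures follow from PCIe 3.0's signalling rate of 8 GT/s per lane and its 128b/130b line encoding. A small Python sketch of that arithmetic (the function name is illustrative) computes per-direction link bandwidth before packet and protocol overhead, so delivered throughput is somewhat lower.

```python
from fractions import Fraction

GT_PER_S_PER_LANE = 8  # PCIe 3.0 signalling rate: 8 gigatransfers/s per lane
ENCODING_EFFICIENCY = Fraction(128, 130)  # PCIe 3.0 128b/130b line encoding


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Per-direction PCIe 3.0 link bandwidth in GB/s after line encoding.

    Each transfer carries one bit per lane. Packet and protocol overhead
    (TLP headers, DLLPs, flow control) is ignored, so usable throughput is lower.
    """
    gbits_per_s = GT_PER_S_PER_LANE * lanes * ENCODING_EFFICIENCY
    return float(gbits_per_s / 8)  # 8 bits per byte


for lanes in (2, 4):
    print(f"x{lanes}: {pcie3_gbytes_per_s(lanes):.2f} GB/s")
```

This prints about 1.97 GB/s for x2 and 3.94 GB/s for x4, which round to the 2GB/s and 4GB/s endpoint figures.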

Second-Generation NVMe Controllers are Here!

This first product has now been followed by the Marvell 88SS1092 second-generation NVMe SSD controller, which has passed in-house SSD validation and third-party OS/platform compatibility testing. The Marvell® 88SS1092 is therefore ready to boost next-generation storage and data center systems, and is being debuted at the Open Compute Project (OCP) Summit, March 8 and 9 in San Jose, Calif.

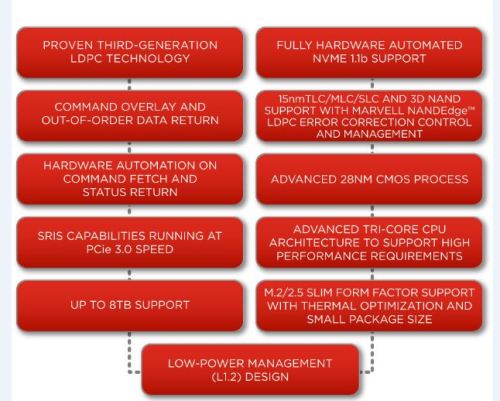

The Marvell 88SS1092 is Marvell's second-generation NVMe SSD controller. It supports PCIe 3.0 x4 endpoints, providing a full 4GB/s interface to the host and helping to remove performance bottlenecks. While the new controller moves solid-state storage systems toward a more fully flash-optimized architecture for greater performance, it also includes Marvell's third-generation low-density parity-check (LDPC) error-correction technology, which enhances reliability, boosts endurance and enables support for TLC NAND in addition to MLC NAND.
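Marvell's LDPC implementation is not described here, so the sketch below illustrates only the general idea behind LDPC decoding, using a generic hard-decision bit-flipping decoder (Gallager-style) on a tiny hypothetical parity-check matrix. A word is valid when every parity check is satisfied (the syndrome is all zeros); when checks fail, the decoder flips the bits that participate in the most failing checks and tries again. Codes used in real SSD controllers are far longer and their decoders more sophisticated, so treat this as a toy illustration.

```python
from typing import List, Tuple

Matrix = List[List[int]]

# Hypothetical toy parity-check matrix: 4 checks over 6 bits. Every bit sits in
# exactly two checks and no two bits share the same pair of checks.
H: Matrix = [
    [1, 1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1],
]


def syndrome(h: Matrix, word: List[int]) -> List[int]:
    """Parity (mod 2) of each check row; all zeros means every check is satisfied."""
    return [sum(hij * bit for hij, bit in zip(row, word)) % 2 for row in h]


def bit_flip_decode(h: Matrix, received: List[int], max_iters: int = 10) -> Tuple[List[int], bool]:
    """Hard-decision bit-flipping decoder; returns (word, all_checks_satisfied)."""
    word = list(received)
    for _ in range(max_iters):
        s = syndrome(h, word)
        if not any(s):
            return word, True
        # For each bit, count the failing checks it participates in.
        votes = [sum(h[r][j] for r in range(len(h)) if s[r]) for j in range(len(word))]
        most = max(votes)
        for j, v in enumerate(votes):
            if v == most:
                word[j] ^= 1  # flip the bits implicated by the most failing checks
    return word, not any(syndrome(h, word))


codeword = [1, 1, 0, 1, 0, 0]  # satisfies all four checks
received = codeword.copy()
received[4] ^= 1  # simulate a single bit error read back from NAND
print(syndrome(H, received))
print(bit_flip_decode(H, received))
```

Because every bit in this toy matrix belongs to two checks and no two bits share both, a single flipped bit is the only one implicated by two failing checks, so one flip restores the codeword.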

Today, the speed and cost benefits of NVMe SSD shared storage are not only a reality, but are now in their second generation. The network paradigm has shifted. With the NVMe protocol, designed from the ground up to exploit the full performance of SSDs, new compute models are emerging without the limitations of legacy rotational media. SSD performance can be maximized, while SSD clusters and new network fabrics enable pooled storage and shared data access. The hard work of the NVMe working group is paying off in today’s data center, as new controllers and technologies help optimize the performance and cost efficiency of SSDs.

Copyright © 2026 Marvell, All rights reserved.