By Maen Suleiman, Senior Software Product Line Manager, Marvell

Thanks to its collaboration with leading players in the OpenWrt and security space, Marvell will be able to show those attending the OpenWrt Summit (Prague, Czech Republic, 26-27th October) new beneficial developments with regard to its Marvell ARMADA® multi-core processors. In collaboration with contributors Sartura and Sentinel, these developments will be demonstrated on Marvell’s portfolio of networking community boards that support the 64-bit Arm® based Marvell ARMADA processor devices, by running the increasingly popular and highly versatile OpenWrt operating system, plus the latest advances in security software. We expect these new offerings will assist engineers in mitigating the major challenges they face when constructing next-generation customer-premises equipment (CPE) and uCPE platforms.

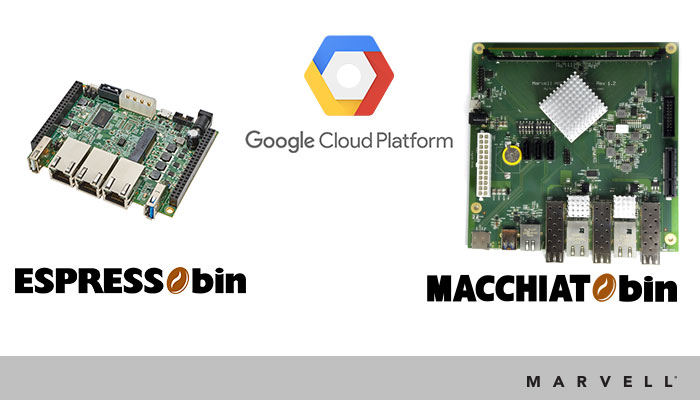

On display at the event at both the Sentinel and Sartura booths will be examples of the Marvell MACCHIATObin™ board (with a quad-core ARMADA 8040 that can deliver up to 2GHz operation) and the Marvell ESPRESSObin™ board (with a dual-core ARMADA 3700 lower power processor running at 1.2GHz).

The boards located at the Sartura booth will demonstrate the open source OpenWrt offering of the Marvell MACCHIATObin/ESPRESSObin platforms and will show how engineers can benefit from this company’s OpenWrt integration capabilities. These capabilities have proven invaluable in helping engineers expedite their development projects and fully realize the initial goals set for them. The Sartura team can take engineers’ original CPE designs incorporating ARMADA and provide the production level software needed for inclusion in end products.

Marvell will also have MACCHIATObin/ESPRESSObin boards demonstrated at the Sentinel booth. These will feature highly optimized security software. Using this security software, companies looking to employ ARMADA based hardware in their designs will be able to ensure that they have ample protection against the threat posed by malware and harmful files - like WannaCry and Nyetya ransomware, as well as Petya malware, etc. This protection relies upon Sentinel’s File Validation Service (FVS), which inspects all HTTP, POP and IMAP files as they pass through the device toward the client. Any files deemed to be malicious are then blocked. This security technology is very well suited to CPE networking infrastructure and edge computing, as well as IoT deployments. Sentinel’s FVS technology can also be implemented on vCPE/uCPE as a security virtual network function (VNF), in addition to native implementation over physical CPEs - providing similar protection levels due to its extremely lightweight architecture and very low latency. FVS is responsible for identifying download requests and subsequently analyzing the data being downloaded. This software package can run on all Linux-based embedded operating systems for CPE and NFV devices which meet minimum hardware requirements and offer the necessary features.

Through collaborations such as those described above, Marvell is building an extensive ecosystem around its ARMADA products. As a result, Marvell will be able to support future development of secure, high performance CPE and uCPE/vCPE systems that exhibit much greater differentiation.

By Tim Lau, Senior Director Automotive Product Management, Marvell

The automobile is encountering possibly the biggest changes in its technological progression since the invention of the internal combustion engine nearly 150 years ago. Increasing levels of autonomy will reshape how we think about cars and car travel. It won't be just a matter of getting from point A to point B while doing very little else -- we will be able to keep on doing what we want while in the process of getting there.

As it is, the modern car already incorporates large quantities of complex electronics - making sure the ride is comfortable, the engine runs smoothly and efficiently, and providing infotainment for the driver and passengers. In addition, the features and functionality being incorporated into vehicles we are now starting to buy are no longer of a fixed nature. It is increasingly common for engine control and infotainment systems to require updates over the course of the vehicle's operational lifespan.

The need for such updates is the issue that proved instrumental in first bringing Ethernet connectivity into the vehicle domain. Leading automotive brands, such as BMW and VW, found they could dramatically increase the speed of software uploads performed by mechanics at service centers by installing small Ethernet networks into the chassis of their vehicle models instead of trying to use the established, but much slower, Controller Area Network (CAN) bus. As a result, transfer times were cut from hours to minutes.
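To give a rough sense of scale for the "hours to minutes" claim, the following back-of-the-envelope sketch compares ideal transfer times. The 1 GB image size and the 500 kbit/s CAN bit rate are illustrative assumptions, not figures from any manufacturer, and protocol overhead and flash write speed are ignored.

```python
def transfer_seconds(image_bytes: int, link_bits_per_s: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and flash write speed."""
    return image_bytes * 8 / link_bits_per_s


IMAGE_BYTES = 1_000_000_000   # hypothetical 1 GB software image (assumption)
CAN_BPS = 500_000             # assumed high-speed CAN bit rate (classical CAN tops out at 1 Mbit/s)
ETHERNET_BPS = 100_000_000    # 100 Mbit/s Ethernet

can_s = transfer_seconds(IMAGE_BYTES, CAN_BPS)
eth_s = transfer_seconds(IMAGE_BYTES, ETHERNET_BPS)

print(f"CAN:      {can_s / 3600:.1f} hours")
print(f"Ethernet: {eth_s / 60:.1f} minutes")
```

Under these assumptions the same image that would occupy a CAN bus for several hours moves over Ethernet in under two minutes.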

As an increasing number of upgradeable Electronic Control Units (ECUs) have appeared (thereby putting greater strain on existing in-vehicle networking technology), the Ethernet network has itself expanded. In response, the semiconductor industry has developed solutions that have made the networking standard, which was initially developed for the relatively electrically clean environment of the office, much more robust and suitable for the stringent requirements of automobile manufacturers. The CAN and Media Oriented Systems Transport (MOST) buses have persisted as the main carriers of real-time information for in-vehicle electronics - although, now, they are beginning to fade as Ethernet evolves into a role as the primary network inside the car, being used for both real-time communications and updating tasks.

In an environment where weight savings are crucial to improving fuel economy, the ability to have communications run over a single network (especially one that needs just a pair of relatively light copper cables) is a huge operational advantage. In addition, a small connector footprint is vital in the context of increasing deployment of sensors (such as cameras, radar and LiDAR transceivers), which are now being mounted all around the car for driver assistance/semi-autonomous driving purposes. This is supported by the adoption of unshielded, twisted-pair cabling.

Image sensing, radar and LiDAR functions will all produce copious amounts of data. So data-transfer capacity is going to be a critical element of in-vehicle Ethernet networks, now and into the future. The industry has responded quickly by first delivering 100 Mbit/s transceivers and following up with more capacious standards-compliant 1000 Mbit/s offerings.

But providing more bandwidth is simply not enough on its own. So that car manufacturers do not need to sacrifice the real-time behavior necessary for reliable control, the relevant international standards committees have developed protocols to guarantee the timely delivery of data. Time Sensitive Networking (TSN) provides applications with the ability to use reserved bandwidth on virtual channels in order to ensure delivery within a predictable timeframe. Less important traffic can make use of the best-effort service of conventional Ethernet with the remaining unreserved bandwidth.
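The split between reserved and best-effort bandwidth can be pictured with a small admission-control sketch. This is a simplified illustration only: it models the bookkeeping of reservations on one link, not the traffic shaping or scheduling that the standards define. The `Link` class, the stream names and the 75% cap are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Link:
    capacity_mbps: float
    max_reserved_fraction: float = 0.75          # assumed, configurable cap on reservations
    reservations: Dict[str, float] = field(default_factory=dict)

    def reserved(self) -> float:
        """Total bandwidth currently reserved on this link."""
        return sum(self.reservations.values())

    def reserve(self, stream: str, mbps: float) -> bool:
        """Admit the stream only if it still fits under the reservation cap."""
        limit = self.capacity_mbps * self.max_reserved_fraction
        if self.reserved() + mbps > limit:
            return False
        self.reservations[stream] = mbps
        return True

    def best_effort(self) -> float:
        """Bandwidth left over for conventional best-effort traffic."""
        return self.capacity_mbps - self.reserved()


link = Link(capacity_mbps=100)
accepted_camera = link.reserve("camera", 40)   # fits: 40 <= 75
accepted_radar = link.reserve("radar", 30)     # fits: 70 <= 75
accepted_lidar = link.reserve("lidar", 20)     # rejected: 90 > 75

print(accepted_camera, accepted_radar, accepted_lidar, link.best_effort())
```

Rejecting a reservation up front, rather than degrading streams that were already admitted, is what lets the admitted streams keep a predictable delivery time.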

The industry’s more forward-thinking semiconductor vendors, Marvell among them, have further enhanced real-time performance with features such as Deep Packet Inspection (DPI), employing Ternary Content-Addressable Memory (TCAM), in their automotive-optimized Ethernet switches. The DPI mechanism makes it possible for hardware to look deep into each packet as it arrives at a switch input and instantly decide exactly how the message should be handled. The packet inspection supports real-time debugging processes by trapping messages of a certain type, and markedly reduces application latency experienced within the deployment by avoiding processor intervention.
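A ternary match can be sketched in software as a value/mask comparison, where mask bits set to 0 mean "don't care". The sketch below is a conceptual model, not Marvell's switch implementation: the key layout (EtherType plus a priority byte), the rule set and the action names are all hypothetical. A hardware TCAM compares every entry in parallel; the loop here only emulates the first-match priority order.

```python
from typing import List, NamedTuple, Optional


class TcamEntry(NamedTuple):
    value: int    # bits to match
    mask: int     # 1 = compare this bit, 0 = don't care
    action: str   # hypothetical action name


def make_key(ethertype: int, priority: int) -> int:
    """Hypothetical 24-bit key: 16-bit EtherType followed by an 8-bit priority field."""
    return (ethertype << 8) | priority


def tcam_lookup(table: List[TcamEntry], key: int) -> Optional[str]:
    """Return the action of the first (highest-priority) matching entry."""
    for entry in table:
        if (key & entry.mask) == (entry.value & entry.mask):
            return entry.action
    return None


TABLE = [
    TcamEntry(make_key(0x88F7, 0), 0xFFFF00, "trap_to_cpu"),         # PTP frames, any priority
    TcamEntry(make_key(0x0000, 7), 0x0000FF, "high_priority_queue"), # any EtherType, priority 7
    TcamEntry(0, 0, "best_effort"),                                  # catch-all
]

print(tcam_lookup(TABLE, make_key(0x88F7, 0)))
print(tcam_lookup(TABLE, make_key(0x0800, 7)))
print(tcam_lookup(TABLE, make_key(0x0800, 0)))
```

Because the decision is a table lookup rather than a software routine, the handling of each packet can be settled without involving a processor, which is where the latency benefit described above comes from.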

Support for remote management frames is another significant protocol innovation in automotive Ethernet. These frames make it possible for a system controller to control the switch state directly. For example, a system controller can automatically power down I/O ports when they are not needed - a feature that preserves precious battery life.

The result of these adaptations to the core Ethernet standard, as well as the increased resilience it now delivers, is the emergence of an expansive feature set that is well positioned for the ongoing transformation of the car, taking it from just being a mode of transportation into the data-rich, autonomous mobile platform it is envisaged to become in the future.

By Prabhu Loganathan, Senior Director of Marketing for Connectivity Business Unit, Marvell

Standardized in 1997, Wi-Fi has changed the way that we compute. Today, almost every one of us uses a Wi-Fi connection on a daily basis, whether it's for watching a show on a tablet at home, using our laptops at work, or even transferring photos from a camera. Millions of Wi-Fi-enabled products are being shipped each week, and it seems this technology is constantly finding its way into new device categories.

Since its humble beginnings, Wi-Fi has progressed at a rapid pace. While the initial standard allowed for just 2 Mbit/s data rates, today's Wi-Fi implementations allow for speeds in the order of Gigabits to be supported. This last in our three part blog series covering the history of Wi-Fi will look at what is next for the wireless standard.

Gigabit Wireless

The latest 802.11 wireless technology to be adopted at scale is 802.11ac. It extends 802.11n, enabling improvements specifically in the 5 GHz band, with 802.11n technology used in the 2.4 GHz band for backwards compatibility.

By sticking to the 5 GHz band, 802.11ac is able to benefit from a huge 160 MHz channel bandwidth, which would be impossible in the already crowded 2.4 GHz band. In addition, beamforming and support for up to 8 MIMO streams raise the speeds that can be supported. Depending on configuration, data rates can range from a minimum of 433 Mbit/s to multiple Gigabits in cases where both the router and the end-user device have multiple antennas.
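The 433 Mbit/s figure and the multi-gigabit upper end can be reproduced from the 802.11ac physical layer parameters. The sketch below assumes the highest modulation (256-QAM with rate 5/6 coding) and the short guard interval, which gives a 3.6 µs OFDM symbol; the function and constant names are illustrative.

```python
# Data subcarriers per channel width for 802.11ac (80 MHz and 160 MHz only).
DATA_SUBCARRIERS = {80: 234, 160: 468}
SYMBOL_SHORT_GI_US = 3.6   # 3.2 us symbol + 0.4 us short guard interval


def vht_rate_mbps(width_mhz: int, streams: int,
                  bits_per_subcarrier: int = 8,        # 256-QAM carries 8 bits
                  coding_rate: float = 5 / 6) -> float:
    """Peak PHY rate in Mbit/s (bits per microsecond) for one configuration."""
    bits_per_symbol = (DATA_SUBCARRIERS[width_mhz] * bits_per_subcarrier
                       * coding_rate * streams)
    return bits_per_symbol / SYMBOL_SHORT_GI_US


single_stream_80 = vht_rate_mbps(80, streams=1)
eight_streams_160 = vht_rate_mbps(160, streams=8)

print(f"80 MHz, 1 stream:   {single_stream_80:.1f} Mbit/s")
print(f"160 MHz, 8 streams: {eight_streams_160:.1f} Mbit/s")
```

These are peak physical layer rates; real-world throughput is lower once protocol overhead and radio conditions are taken into account.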

If that's not fast enough, the even more cutting edge 802.11ad standard (which is now starting to appear on the market) uses 60 GHz ‘millimeter wave’ frequencies to achieve data rates up to 7 Gbit/s, even without MIMO propagation. The major catch with this is that at 60 GHz frequencies, wireless range and penetration are greatly reduced.

Looking Ahead

Now that we've achieved Gigabit speeds, what's next? Besides high speeds, the IEEE 802.11 working group has recognized that low speed, power efficient communication is in fact also an area with a great deal of potential for growth. While Wi-Fi has traditionally been a relatively power-hungry standard, the upcoming protocols will have attributes that will allow it to target areas like the Internet of Things (IoT) market with much more energy efficient communication.

20 Years and Counting

Although it has been around for two whole decades as a standard, Wi-Fi has managed to constantly evolve and keep up with the times. From the dial-up era to broadband adoption, to smartphones and now as we enter the early stages of IoT, Wi-Fi has kept on developing new technologies to adapt to the needs of the market. If history can be used to give us any indication, then it seems certain that Wi-Fi will remain with us for many years to come.

By Jeroen Dorgelo, Director of Strategy, Storage Group, Marvell

The dirty little secret of flash drives today is that many of them are running on yesterday’s interfaces. While SATA and SAS have undergone several iterations since they were first introduced, they are still based on decades-old concepts and were initially designed with rotating disks in mind. These legacy protocols are bottlenecking the potential speeds possible from today’s SSDs.

NVMe is the latest storage interface standard designed specifically for SSDs. With its massively parallel architecture, it enables the full performance capabilities of today’s SSDs to be realized. Because of price and compatibility, NVMe has taken a while to see uptake, but now it is finally coming into its own.

Serial Attached Legacy

Currently, SATA is the most common storage interface. Whether a hard drive or increasingly common flash storage, chances are it is running through a SATA interface. The latest generation of SATA - SATA III - has a 600 MB/s bandwidth limit. While this is adequate for day-to-day consumer applications, it is not enough for enterprise servers. Even I/O intensive consumer use cases, such as video editing, can run into this limit.

The SATA standard was originally released in 2000 as a serial-based successor to the older PATA standard, a parallel interface. SATA uses the advanced host controller interface (AHCI) which has a single command queue with a depth of 32 commands. This command queuing architecture is well-suited to conventional rotating disk storage, though more limiting when used with flash.

Whereas SATA is the standard storage interface for consumer drives, SAS is much more common in the enterprise world. Released originally in 2004, SAS is also a serial replacement for an older parallel standard, SCSI. Designed for enterprise applications, SAS storage is usually more expensive to implement than SATA, but it has significant advantages over SATA for data center use - such as longer cable lengths, multipath IO, and better error reporting. SAS also has a higher bandwidth limit of 1200MB/s.

Just like SATA, SAS has a single command queue, although the queue depth of SAS goes to 254 commands instead of 32 commands. While the larger command queue and higher bandwidth limit make it better performing than SATA, SAS is still far from being the ideal flash interface.

NVMe - Massive Parallelism

Introduced in 2011, NVMe was designed from the ground up for addressing the needs of flash storage. Developed by a consortium of storage companies, its key objective is specifically to overcome the bottlenecks on flash performance imposed by SATA and SAS.

Whereas SATA is restricted to 600MB/s and SAS to 1200MB/s (as mentioned above), NVMe runs over the PCIe bus and its bandwidth is theoretically limited only by the PCIe bus speed. With current PCIe standards providing 1GB/s or more per lane, and PCIe connections generally offering multiple lanes, bus speed almost never represents a bottleneck for NVMe-based SSDs.
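As a quick worked comparison, the sketch below uses the lower bound of 1 GB/s per lane quoted above and a few lane counts chosen purely for illustration. The constant and function names are not from any specification.

```python
SATA_MBPS = 600
SAS_MBPS = 1200
PCIE_MBPS_PER_LANE = 1000   # lower bound of "1GB/s or more per lane"


def pcie_bandwidth_mbps(lanes: int) -> int:
    """Aggregate link bandwidth for a given number of PCIe lanes."""
    return lanes * PCIE_MBPS_PER_LANE


for lanes in (1, 2, 4):   # illustrative lane counts
    bw = pcie_bandwidth_mbps(lanes)
    print(f"x{lanes}: {bw} MB/s "
          f"({bw / SATA_MBPS:.1f}x SATA, {bw / SAS_MBPS:.1f}x SAS)")
```

Even a two-lane link at this conservative per-lane figure exceeds the SAS limit, and a four-lane link offers more than six times the SATA limit.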

NVMe is designed to deliver massive parallelism, offering 64,000 command queues, each with a queue depth of 64,000 commands. This parallelism fits in well with the random access nature of flash storage, as well as the multi-core, multi-threaded processors in today’s computers. NVMe’s protocol is streamlined, with an optimized command set that does more in fewer operations compared to AHCI. IO operations often need fewer commands than with SATA or SAS, allowing latency to be reduced. For enterprise customers, NVMe also supports many enterprise storage features, such as multi-path IO and robust error reporting and management.
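To put the queueing figures side by side, the following sketch multiplies the number of queues by the queue depth for each interface, using the values quoted in this article. It shows the theoretical ceiling on outstanding commands only; real drives and drivers typically configure far fewer queues.

```python
# (number of command queues, depth of each queue), as quoted in the text
INTERFACES = {
    "SATA (AHCI)": (1, 32),
    "SAS": (1, 254),
    "NVMe": (64_000, 64_000),
}


def max_outstanding(queues: int, depth: int) -> int:
    """Theoretical maximum number of commands in flight at once."""
    return queues * depth


for name, (queues, depth) in INTERFACES.items():
    print(f"{name:12s} {max_outstanding(queues, depth):>13,} commands")
```

With many independent queues available, each processor core can submit I/O on a queue of its own instead of contending for a single shared one.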

Pure speed and low latency, plus the ability to deal with high IOPS, have made NVMe SSDs a hit in enterprise data centers. Companies that particularly value low latency and high IOPS, such as high-frequency trading firms and database and web application hosting companies, have been some of the first and most avid endorsers of NVMe SSDs.

Barriers to Adoption

While NVMe is high performance, historically speaking it has also been considered relatively high cost. This cost has negatively affected its popularity in the consumer-class storage sector. Relatively few operating systems supported NVMe when it first came out, and its high price made it less attractive for ordinary consumers, many of whom could not fully take advantage of its faster speeds anyway.

However, all this is changing. NVMe prices are coming down and, in some cases, achieving price parity with SATA drives. This is due not only to market forces but also to new innovations, such as DRAM-less NVMe SSDs.

As DRAM is a significant bill of materials (BoM) cost for SSDs, DRAM-less SSDs are able to achieve lower, more attractive price points. Since NVMe 1.2, host memory buffer (HMB) support has allowed DRAM-less SSDs to borrow host system memory as the SSD’s DRAM buffer for better performance. DRAM-less SSDs that take advantage of HMB support can achieve performance similar to that of DRAM-based SSDs, while simultaneously saving cost, space and energy.

NVMe SSDs are also more power-efficient than ever. While the NVMe protocol itself is already efficient, the PCIe link it runs over can consume significant levels of idle power. Newer NVMe SSDs support highly efficient, autonomous sleep state transitions, which allow them to achieve energy consumption on par with or lower than that of SATA SSDs.

All this means that NVMe is more viable than ever for a variety of use cases, from large data centers, which can save on capital expenditures due to lower cost SSDs and on operating expenditures as a result of lower power consumption, to power-sensitive mobile/portable applications such as laptops, tablets and smartphones, which can now consider using NVMe.

Addressing the Need for Speed

While the need for speed is well recognized in enterprise applications, is the speed offered by NVMe actually needed in the consumer world? For anyone who has ever installed more memory, bought a larger hard drive (or SSD), or ordered a faster Internet connection, the answer is obvious.

Today’s consumer use cases generally do not yet test the limits of SATA drives, most likely in part because SATA is still the most common interface for consumer storage. Even so, today’s video recording and editing, gaming and file server applications are already pushing the limits of consumer SSDs, and tomorrow’s use cases are only destined to push them further. With NVMe now achieving price points that are comparable with SATA, there is no reason not to build future-proof storage today.

By Aviad Enav Zagha, Sr. Director Embedded Processors Product Line Manager, Networking Group, Marvell

Though the projections made by market analysts still differ to a considerable degree, there is little doubt about the huge future potential that implementation of Internet of Things (IoT) technology has within an enterprise context. It is destined to lead to billions of connected devices being in operation, all sending captured data back to the cloud, from which analysis can be undertaken or actions initiated. This will make existing business/industrial/metrology processes more streamlined and allow a variety of new services to be delivered.

With large numbers of IoT devices to deal with in any given enterprise network, the challenges of efficiently and economically managing them all without any latency issues, and ensuring that elevated levels of security are upheld, are going to prove daunting. In order to put the least possible strain on cloud-based resources, we believe the best approach is to divest some intelligence outside the core and place it at the enterprise edge, rather than following a purely centralized model. This arrangement places computing functionality much nearer to where the data is being acquired and makes a response to it considerably easier. IoT devices will then have a local edge hub that can reduce the overhead of real-time communication over the network. Rather than relying on cloud servers far away from the connected devices to take care of the ‘heavy lifting’, these activities can be done closer to home. Deterministic operation is maintained due to lower latency, bandwidth is conserved (thus saving money), and the likelihood of data corruption or security breaches is dramatically reduced.

Sensors and data collectors in the enterprise, industrial and smart city segments are expected to generate more than 1GB per day of information, some needing a response within a matter of seconds. Therefore, in order for the network to accommodate the large amount of data, computing functionalities will migrate from the cloud to the network edge, forming a new market of edge computing.

In order to accelerate the widespread propagation of IoT technology within the enterprise environment, Marvell now supports the multifaceted Google Cloud IoT Core platform. Cloud IoT Core is a fully managed service mechanism through which the management and secure connection of devices can be accomplished on the large scales that will be characteristic of most IoT deployments.

Through its IoT enterprise edge gateway technology, Marvell is able to provide the necessary networking and compute capabilities required (as well as the prospect of localized storage) to act as mediator between the connected devices within the network and the related cloud functions. By providing the control element needed, as well as collecting real-time data from IoT devices, the IoT enterprise gateway technology serves as a key consolidation point for interfacing with the cloud and also has the ability to temporarily control managed devices if an event occurs that makes cloud services unavailable. In addition, the IoT enterprise gateway can perform the role of a proxy manager for lightweight, rudimentary IoT devices that (in order to keep power consumption and unit cost down) may not possess any intelligence. Through the introduction of advanced ARM®-based community platforms, Marvell is able to facilitate enterprise implementations using Cloud IoT Core. The recently announced Marvell MACCHIATObin™ and Marvell ESPRESSObin™ community boards support open source applications, local storage and networking facilities. At the heart of each of these boards is Marvell’s high performance ARMADA® system-on-chip (SoC) that supports Google Cloud IoT Core Public Beta.
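The consolidation role described above can be illustrated with a minimal sketch: a gateway that aggregates device readings locally and holds the summaries while the cloud is unreachable. This is a conceptual model, not Marvell's gateway software or the Cloud IoT Core API; the `EdgeGateway` class, the `uplink` callable and the batch size are all hypothetical.

```python
from collections import deque
from statistics import mean


class EdgeGateway:
    """Hypothetical gateway: summarizes readings and queues them for the cloud."""

    def __init__(self, send_to_cloud, batch_size=3):
        self.send_to_cloud = send_to_cloud   # callable; returns True on success
        self.batch_size = batch_size
        self.readings = []                   # raw readings not yet summarized
        self.pending = deque()               # summaries waiting for the cloud

    def on_reading(self, device_id, value):
        self.readings.append((device_id, value))
        if len(self.readings) >= self.batch_size:
            summary = {"count": len(self.readings),
                       "mean": mean(v for _, v in self.readings)}
            self.readings.clear()
            self.pending.append(summary)
        self.flush()

    def flush(self):
        """Send queued summaries in order; stop at the first failure."""
        while self.pending:
            if not self.send_to_cloud(self.pending[0]):
                break
            self.pending.popleft()


received = []
state = {"cloud_up": False}   # simulated connectivity flag


def uplink(message):
    if state["cloud_up"]:
        received.append(message)
    return state["cloud_up"]


gateway = EdgeGateway(uplink, batch_size=2)
gateway.on_reading("sensor-1", 20.0)
gateway.on_reading("sensor-2", 22.0)   # summary created, cloud is down, so it is held
state["cloud_up"] = True
gateway.on_reading("sensor-1", 24.0)   # connectivity restored, queued summary is sent

print(received)
```

Only the summary crosses the network, which reflects the bandwidth saving argued for earlier, and nothing is lost while the cloud service is temporarily unavailable.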

Via Cloud IoT Core, along with other related Google Cloud services (including Pub/Sub, Dataflow, Bigtable, BigQuery, Data Studio), enterprises can benefit from an all-encompassing IoT solution that addresses the collection, processing, evaluation and visualization of real-time data in a highly efficient manner. Cloud IoT Core features certificate-based authentication and transport layer security (TLS), plus an array of sophisticated analytical functions.

Over time, the enterprise edge is going to become more intelligent. Consequently, mediation between IoT devices and the cloud will be needed, as will cost-effective processing and management. With the combination of Marvell’s proprietary IoT gateway technology and Google Cloud IoT Core, it is now possible to migrate a portion of network intelligence to the enterprise edge, leading to various major operational advantages.

Please visit MACCHIATObin Wiki and ESPRESSObin Wiki for instructions on how to connect to Google’s Cloud IoT Core Public Beta platform.