By Todd Owens, Field Marketing Director, Marvell

At Cavium, we provide adapters that support a variety of protocols for connecting servers to shared storage, including iSCSI, Fibre Channel over Ethernet (FCoE) and native Fibre Channel (FC). One question we get quite often is which protocol is best for connecting servers to shared storage. The answer is: it depends.

We can simplify the answer by eliminating FCoE, as it has proven to be a great protocol for converging the edge of the network (server to top-of-rack switch), but not really effective for multi-hop connectivity, taking servers through a network to shared storage targets. That leaves us with iSCSI and FC.

Typically, people equate iSCSI with lower cost and ease of deployment because it works on the same kind of Ethernet network that servers and clients are already running on. These same folks see FC as expensive and complex, requiring special adapters, switches and a “SAN Administrator” to make it all work.

This may have been the case in the early days of shared storage, but things have changed as the intelligence and performance of the storage network environment have evolved. What customers need to do is look at the reality of what they need from a shared storage environment and make a decision based on cost, performance and manageability. For this blog, I’m going to focus on these three criteria and compare 10Gb Ethernet (10GbE) with iSCSI hardware offload and 16Gb Fibre Channel (16GFC).

Before we crunch numbers, let me start by saying that shared storage requires a dedicated network, regardless of the protocol. Running iSCSI on the same network as the server and client traffic may be feasible for small or medium environments with just a couple of servers. But for any environment with mission-critical applications or with, say, four or more servers connecting to a shared storage device, a dedicated storage network is strongly advised to increase reliability and eliminate performance problems related to network issues.

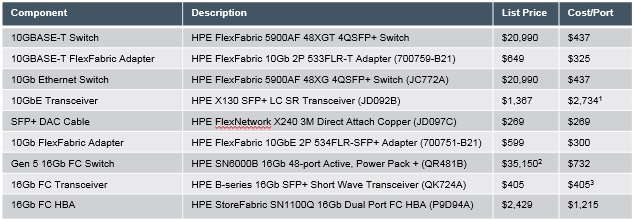

Now that we have that out of the way, let’s start by looking at the cost difference between iSCSI and FC. We have to take into account the costs of the adapters, optics/cables and switch infrastructure. Here’s the list of Hewlett Packard Enterprise (HPE) components I will use in the analysis. All prices are based on published HPE list prices.

Notes:

1. An optical transceiver is needed at both the adapter and switch ports for 10GbE networks. Thus cost/port is two times the transceiver cost.
2. FC switch pricing includes full-featured management software and licenses.
3. FC Host Bus Adapters (HBAs) ship with transceivers, thus only one additional transceiver is needed for the switch port.

So if we do the math, the cost per port looks like this:

10GbE iSCSI with SFP+ Optics = $437 + $2,734 + $300 = $3,471

10GbE iSCSI with 3 meter Direct Attach Cable (DAC) = $437 + $269 + $300 = $1,006

16GFC with SFP+ Optics = $773 + $405 + $1,400 = $2,578

So iSCSI is the lowest price if DAC cables are used. Note, in my example, I chose 3 meter cable length, but even if you choose shorter or longer cables (HPE supports from 0.65 to 7 meter cable lengths), this is still the lowest cost connection option. Surprisingly, the cost of the 10GbE optics makes the iSCSI solution with optical connections the most expensive configuration. When using fiber optic cables, the 16GFC configuration is lower cost.
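The per-port sums above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the dollar figures are the ones quoted in this post, while the labels on the three terms (adapter port, optics or cable, switch port, in that order) are my assumption, since the sums themselves do not name them.

```python
# Per-port list prices in USD, copied from the sums in this post.
# Assumption: each tuple is (adapter port, optics or cable, switch port).
CONFIGS = {
    "10GbE iSCSI, SFP+ optics": (437, 2734, 300),
    "10GbE iSCSI, 3 m DAC": (437, 269, 300),
    "16GFC, SFP+ optics": (773, 405, 1400),
}


def cost_per_port(parts):
    """Add up the component prices for one server-to-switch connection."""
    return sum(parts)


totals = {name: cost_per_port(parts) for name, parts in CONFIGS.items()}

# Print the configurations from least to most expensive.
for name, total in sorted(totals.items(), key=lambda item: item[1]):
    print(f"{name}: ${total:,}")
```

Swapping in current prices for your own adapters, optics and switches is just a matter of editing the tuples.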

So what are the trade-offs with DAC versus SFP+ options? It really comes down to distance and the number of connections required. The DAC cables can only span up to 7 meters or so. That means customers have only limited reach within or across racks. If customers have multiple racks or distance requirements of more than 7 meters, FC becomes the more attractive option from a cost perspective. Also, DAC cables are bulky, and when cabling more than 10 ports, the cable bundles can become unwieldy.
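To make the distance trade-off concrete, here is a rough sketch that picks the lowest-cost option for a given cable run. The per-port totals and the 7 meter DAC limit come from this post. Treating optical reach as unlimited is a simplifying assumption of mine, and the DAC total uses the 3 meter cable price for every length, so take it as an illustration rather than a sizing tool.

```python
import math

# (cost per port in USD, maximum cable reach in meters)
# Assumption: optical links are treated as having no reach limit here.
OPTIONS = {
    "10GbE iSCSI, DAC": (1006, 7.0),
    "10GbE iSCSI, SFP+ optics": (3471, math.inf),
    "16GFC, SFP+ optics": (2578, math.inf),
}


def cheapest_option(distance_m):
    """Return the lowest-cost option whose cable can span distance_m."""
    feasible = {
        name: cost
        for name, (cost, reach) in OPTIONS.items()
        if distance_m <= reach
    }
    return min(feasible, key=feasible.get)


print(cheapest_option(3))   # a run within a single rack
print(cheapest_option(20))  # a run across several racks
```

Within 7 meters the DAC option wins on cost; past that, only the optical options remain and 16GFC is the cheaper of the two.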

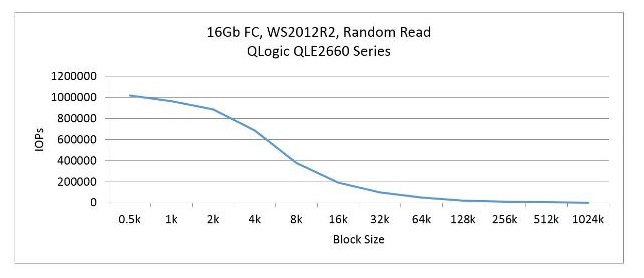

On the performance side, let’s look at the differences. iSCSI adapters have impressive specifications of 10Gbps bandwidth and 1.5 million IOPS, which offers very good performance. For FC, we have 16Gbps of bandwidth and 1.3 million IOPS. So FC has more bandwidth and iSCSI can deliver slightly more transactions. Well, that is, if you take the specifications at face value. If you dig a little deeper, here are some things we learn:

Figure 1: Cavium’s iSCSI Hardware Offload IOPS Performance

Figure 2: Cavium’s QLogic 16Gb FC IOPS performance

If we look at manageability, this is where things have probably changed the most. Keep in mind, Ethernet network management hasn’t really changed much. Network administrators create virtual LANs (VLANs) to separate network traffic and reduce congestion. These network administrators have a variety of tools and processes that allow them to monitor network traffic, run diagnostics and make changes on the fly when congestion starts to impact application performance. The same management approach applies to the iSCSI network and can be done by the same network administrators.

On the FC side, companies like Cavium and HPE have made significant software improvements to simplify SAN deployment, orchestration and management. Technologies like fabric-assigned port worldwide name (FA-WWN) from Cavium and Brocade enable the SAN administrator to configure the SAN without having HBAs available and allow a failed server to be replaced without having to reconfigure the SAN fabric. Cavium and Brocade have also teamed up to improve the FC SAN diagnostics capability with Gen 5 (16Gb) Fibre Channel fabrics by implementing features such as Brocade ClearLink™ diagnostics, Fibre Channel Ping (FC ping) and Fibre Channel traceroute (FC traceroute), link cable beacon (LCB) technology and more. HPE’s Smart SAN for HPE 3PAR provides the storage administrator the ability to zone the fabric and map the servers and LUNs to an HPE 3PAR StoreServ array from the HPE 3PAR StoreServ management console.

Another way to look at manageability is in the number of administrators on staff. In many enterprise environments, there are typically dozens of network administrators. In those same environments, there may be fewer than a handful of “SAN” administrators. Yes, there are lots of LAN-connected devices that need to be managed and monitored, and far fewer SAN-connected devices. The point is it doesn’t take an army to manage a SAN with today’s FC management software from vendors like Brocade.

So what is the right answer between FC and iSCSI? Well, it depends. If application performance is the biggest criterion, it’s hard to beat the combination of bandwidth, IOPS and latency of the 16GFC SAN. If compatibility and commonality with existing infrastructures is a critical requirement, 10GbE iSCSI is a good option (assuming the 10GbE infrastructure exists in the first place). If security is a key concern, FC is the best choice. When is the last time you heard of an FC network being hacked into? And if cost is the key criterion, iSCSI with a DAC or 10GBASE-T connection is a good choice, understanding the tradeoff in latency and bandwidth performance.

So in very general terms, FC is the best choice for enterprise customers who need high performance, mission-critical capability, high reliability and scalable shared storage connectivity. For smaller customers who are more cost sensitive, iSCSI is a great alternative. iSCSI is also a good protocol for pre-configured systems like hyper-converged storage solutions to provide simple connectivity to existing infrastructure.

As a wise manager once told me many years ago, “If you start with the customer and work backwards, you can’t go wrong.” So the real answer is to understand what the customer needs and design the best solution to meet those needs based on the information above.