By Michael Kanellos, Head of Influencer Relations, Marvell

The opportunity for custom silicon isn’t just getting larger – it’s becoming more diverse.

At the Custom AI Investor Event, Marvell executives outlined how the push to advance accelerated infrastructure is driving surging demand for custom silicon – reshaping the customer base, product categories and underlying technologies. (A recording and the presentation slides are available.)

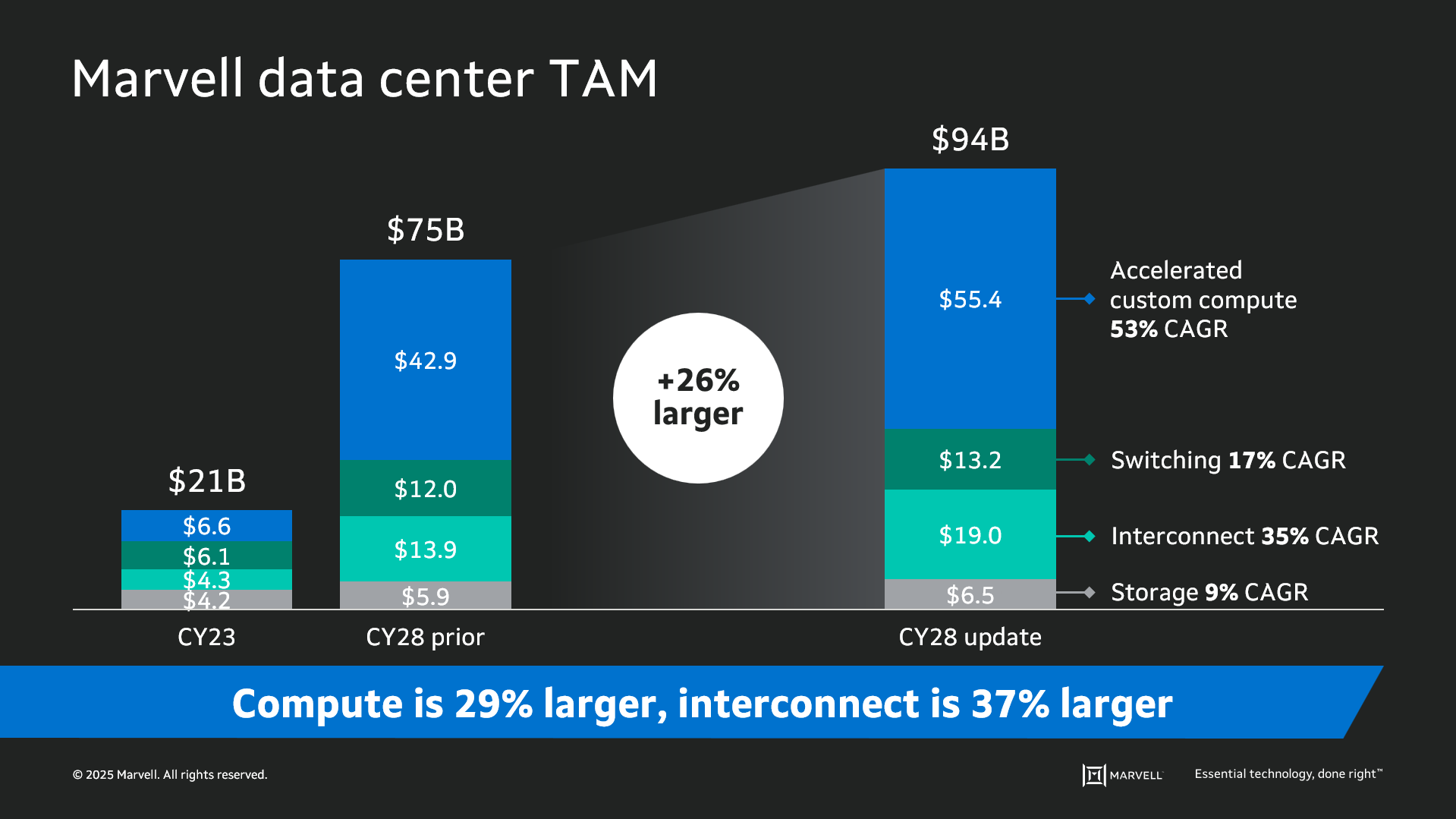

Data infrastructure spending is now slated to surpass $1 trillion by 2028¹, with the Marvell total addressable market (TAM) for data center semiconductors rising to $94 billion by then, 26% larger than the year before. Of that total, $55.4 billion revolves around custom devices for accelerated compute¹. In fact, the forecast for every major product segment has risen in the past year, underscoring the growing momentum behind custom silicon.

The deeper you go into the numbers, the more compelling the story becomes. The custom market is evolving into two distinct elements: the XPU segment, focused on optimized CPUs and accelerators, and the XPU attach segment that includes PCIe retimers, co-processors, CPO components, CXL controllers and other devices that serve to increase the utilization and performance of the entire system. Meanwhile, the TAM for custom XPUs is expected to reach $40.8 billion by 2028, growing at a 47% CAGR¹.
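For readers who want to check how these figures relate, here is a minimal Python sketch of the arithmetic. It rests on two assumptions that the text does not state outright: that "26% larger than the year before" compares this year's 2028 forecast with last year's, and that the $55.4 billion custom figure is the sum of the XPU and XPU attach segments. The function names are illustrative only.

```python
# Figures quoted in the text, in USD billions (2028 forecast).
DATA_CENTER_TAM = 94.0
CUSTOM_TAM = 55.4
CUSTOM_XPU_TAM = 40.8
TAM_GROWTH_VS_PRIOR = 0.26  # "26% larger than the year before"
XPU_CAGR = 0.47


def share(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    return part / whole


def implied_prior(value: float, growth: float) -> float:
    """Back out the earlier figure from a value and its growth rate."""
    return value / (1.0 + growth)


def implied_base(final: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a CAGR over `years` years.

    The text does not give the base year, so `years` is the caller's assumption.
    """
    return final / (1.0 + cagr) ** years


# Custom silicon as a fraction of the data center semiconductor TAM.
custom_share = share(CUSTOM_TAM, DATA_CENTER_TAM)

# ASSUMPTION: custom TAM = XPU + XPU attach, so attach is the remainder.
xpu_attach_tam = CUSTOM_TAM - CUSTOM_XPU_TAM

# ASSUMPTION: the 26% compares against the prior year's forecast.
prior_forecast = implied_prior(DATA_CENTER_TAM, TAM_GROWTH_VS_PRIOR)

if __name__ == "__main__":
    print(f"Custom share of TAM:      {custom_share:.1%}")
    print(f"Implied XPU attach TAM:   ${xpu_attach_tam:.1f}B")
    print(f"Implied prior forecast:   ${prior_forecast:.1f}B")
```

Under those assumptions, custom devices make up a little under 60% of the forecast TAM, and the XPU attach segment accounts for the portion of the custom figure not covered by XPUs.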

By Kristin Hehir, Senior Manager, PR and Marketing, Marvell

Powered by the Marvell® Bravera™ SC5 controller, Memblaze developed the PBlaze 7 7940 GEN5 SSD family, delivering an impressive 2.5 times the performance and 1.5 times the power efficiency compared to conventional PCIe 4.0 SSDs and ~55/9 µs read/write latency¹. This makes the SSD ideal for business-critical applications and high-performance workloads like machine learning and cloud computing. In addition, Memblaze utilized the innovative sustainability features of Marvell’s Bravera SC5 controllers for greater resource efficiency, reduced environmental impact and streamlined development efforts and inventory management.


By Todd Owens, Field Marketing Director, Marvell

While Fibre Channel (FC) has been around for a couple of decades now, the Fibre Channel industry continues to develop the technology in ways that keep it at the forefront of the data center for shared storage connectivity. Fibre Channel has always been a reliable technology, and continued innovations in performance, security and manageability have made Fibre Channel I/O the go-to connectivity option for business-critical applications that leverage the most advanced shared storage arrays.

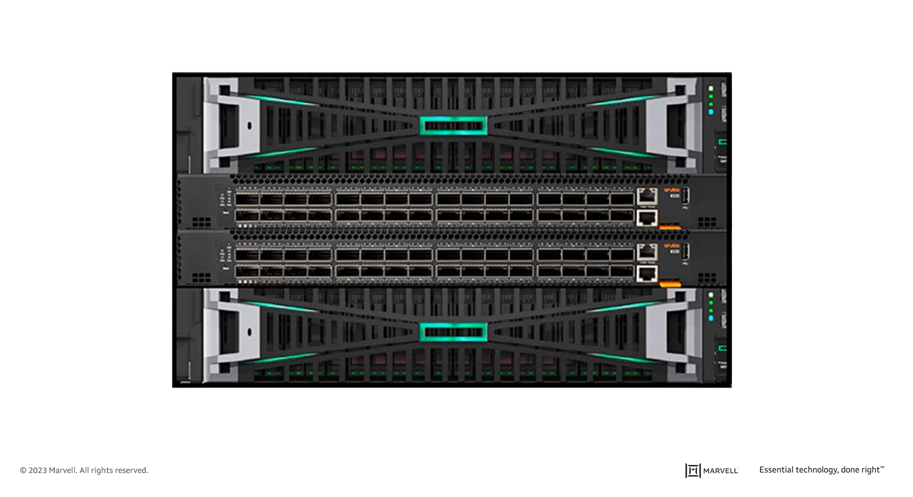

A recent development that highlights the progress and significance of Fibre Channel is Hewlett Packard Enterprise’s (HPE) announcement of the latest offering in its Storage as a Service (SaaS) lineup with 32Gb Fibre Channel connectivity. HPE GreenLake for Block Storage MP powered by HPE Alletra Storage MP hardware features a next-generation platform connected to the storage area network (SAN) using either traditional SCSI-based FC or NVMe over FC connectivity. This innovative solution not only provides customers with highly scalable capabilities but also delivers cloud-like management, allowing HPE customers to consume block storage any way they desire – own and manage, outsource management, or consume on demand.

HPE GreenLake for Block Storage powered by Alletra Storage MP

At launch, HPE is providing FC connectivity for this storage system to the host servers and supporting both FC-SCSI and native FC-NVMe. HPE plans to provide additional connectivity options in the future, but the fact that it prioritized FC connectivity speaks volumes about customer demand for mature, reliable and low-latency FC technology.

By Amir Bar-Niv, VP of Marketing, Automotive Business Unit, Marvell; John Heinlein, Chief Marketing Officer, Sonatus; and Simon Edelhaus, VP SW, Automotive Business Unit, Marvell

The software-defined vehicle (SDV) is one of the newest and most interesting megatrends in the automotive industry. As we discussed in a previous blog, the reason that this new architectural—and business—model will be successful is the advantages it offers to all stakeholders:

What is a software-defined vehicle? While there is no official definition, the term reflects the change in the way software is being used in vehicle design to enable flexibility and extensibility. To better understand the software-defined vehicle, it helps to first examine the current approach.

Today’s embedded control units (ECUs) that manage car functions do include software; however, the software in each ECU is often incompatible with and isolated from other modules. When updates are required, the vehicle owner must visit the dealer service center, which inconveniences the owner and is costly for the manufacturer.

By Kishore Atreya, Director of Product Management, Marvell

Recently the Linux Foundation hosted its annual ONE Summit for open networking, edge projects and solutions. For the first time, this year’s event included a “mini-summit” for SONiC, an open source networking operating system targeted for data center applications that’s been widely adopted by cloud customers. A variety of industry members gave presentations, including Marvell’s very own Vijay Vyas Mohan, who presented on the topic of Extensible Platform Serdes Libraries. In addition, the SONiC mini-summit included a hackathon to motivate users and developers to innovate new ways to solve customer problems.

So, what could we hack?

At Marvell, we believe that SONiC has utility not only for the data center, but to enable solutions that span from edge to cloud. Because it’s a data center NOS, SONiC is not optimized for edge use cases. It requires an expensive bill of materials to run, including a powerful CPU, a minimum of 8 to 16GB DDR, and an SSD. In the data center environment, these HW resources contribute less to the BOM cost than do the optics and switch ASIC. However, for edge use cases with 1G to 10G interfaces, the cost of the processor complex, primarily driven by the NOS, can be a much more significant contributor to overall system cost. For edge disaggregation with SONiC to be viable, the hardware cost needs to be comparable to that of a typical OEM-based solution. Today, that’s not possible.