By Amir Bar-Niv, VP of Marketing, Automotive Business Unit, Marvell and John Bergen, Sr. Product Marketing Manager, Automotive Business Unit, Marvell

In the early decades of American railroad construction, competing companies laid their tracks at different widths. Such inconsistent standards drove inefficiencies, preventing the easy exchange of rolling stock from one railroad to the next, and impeding the infrastructure from coalescing into a unified national network. Only in the 1860s, when a national standard emerged – 4 feet, 8-1/2 inches – did railroads begin delivering their true, networked potential.

Some one hundred-and-sixty years later, as Marvell and its competitors race to reinvent the world’s transportation networks, universal design standards are more important than ever. Recently, Marvell’s 88Q5050 Ethernet Device Bridge became the first of its type in the automotive industry to receive Avnu certification, meeting exacting new technical standards that facilitate the exchange of information between diverse in-car networks, which enable today’s data-dependent vehicles to operate smoothly, safely and reliably.

By Gidi Navon, Senior Principal Architect, Marvell

The current environment and an expected “new normal” are driving the transition to a borderless enterprise that must support increasing performance requirements and evolving business models. The infrastructure is seeing growth in the number of endpoints (including IoT) and escalating demand for data such as high-definition content. Ultimately, wired and wireless networks are being stretched as data-intensive applications and cloud migrations continue to rise.

By Todd Owens, Field Marketing Director, Marvell

At Cavium, we provide adapters that support a variety of protocols for connecting servers to shared storage including iSCSI, Fibre Channel over Ethernet (FCoE) and native Fibre Channel (FC). One of the questions we get quite often is which protocol is best for connecting servers to shared storage? The answer is, it depends.

We can simplify the answer by eliminating FCoE, as it has proven to be a great protocol for converging the edge of the network (server to top-of-rack switch), but not really effective for multi-hop connectivity, taking servers through a network to shared storage targets. That leaves us with iSCSI and FC.

Typically, people equate iSCSI with lower cost and ease of deployment because it works on the same kind of Ethernet network that servers and clients are already running on. These same folks regard FC as expensive and complex, requiring special adapters, switches and a “SAN Administrator” to make it all work.

This may have been the case in the early days of shared storage, but things have changed as the intelligence and performance of the storage network environment has evolved. What customers need to do is look at the reality of what they need from a shared storage environment and make a decision based on cost, performance and manageability. For this blog, I’m going to focus on these three criteria and compare 10Gb Ethernet (10GbE) with iSCSI hardware offload and 16Gb Fibre Channel (16GFC).

Before we crunch numbers, let me start by saying that shared storage requires a dedicated network, regardless of the protocol. The idea that iSCSI can be run on the same network as the server and client network traffic may be feasible for small or medium environments with just a couple of servers, but for any environment with mission-critical applications or with, say, four or more servers connecting to a shared storage device, a dedicated storage network is strongly advised to increase reliability and eliminate performance problems related to network issues.

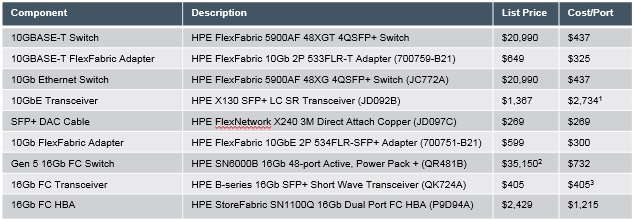

Now that we have that out of the way, let’s start by looking at the cost difference between iSCSI and FC. We have to take into account the costs of the adapters, optics/cables and switch infrastructure. Here’s the list of Hewlett Packard Enterprise (HPE) components I will use in the analysis. All prices are based on published HPE list prices.

Notes:

1. Optical transceiver needed at both adapter and switch ports for 10GbE networks. Thus cost/port is two times the transceiver cost.
2. FC switch pricing includes full featured management software and licenses.
3. FC Host Bus Adapters (HBAs) ship with transceivers, thus only one additional transceiver is needed for the switch port.

So if we do the math, the cost per port looks like this:

10GbE iSCSI with SFP+ Optics = $437 + $2,734 + $300 = $3,471

10GbE iSCSI with 3 meter Direct Attach Cable (DAC) = $437 + $269 + $300 = $1,006

16GFC with SFP+ Optics = $773 + $405 + $1,400 = $2,578

So iSCSI is the lowest price if DAC cables are used. Note, in my example, I chose 3 meter cable length, but even if you choose shorter or longer cables (HPE supports from 0.65 to 7 meter cable lengths), this is still the lowest cost connection option. Surprisingly, the cost of the 10GbE optics makes the iSCSI solution with optical connections the most expensive configuration. When using fiber optic cables, the 16GFC configuration is lower cost.
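The per-port arithmetic above can be reproduced with a short Python sketch. The dollar figures are the ones quoted in this post; the mapping of each figure to a component (adapter, then optics or cable, then switch port) is an assumption based on the order in which the cost elements are listed, and the function and variable names are purely illustrative.

```python
def cost_per_port(adapter, link, switch_port):
    """Sum the three per-port cost elements (USD list prices)."""
    return adapter + link + switch_port


# Figures quoted in the text. Which figure belongs to which component is an
# assumption (order: adapter, optics/cable, switch port).
options = {
    # 10GbE optics figure already covers two transceivers (adapter + switch side)
    "10GbE iSCSI, SFP+ optics": cost_per_port(437, 2734, 300),
    "10GbE iSCSI, 3 m DAC": cost_per_port(437, 269, 300),
    # FC HBA ships with its transceiver, so only the switch-side optic is added
    "16GFC, SFP+ optics": cost_per_port(773, 405, 1400),
}

cheapest = min(options, key=options.get)

# Print the options from lowest to highest cost per port
for name, cost in sorted(options.items(), key=lambda item: item[1]):
    print(f"{name}: ${cost:,}")
print(f"Lowest cost per port: {cheapest}")
```

Swapping in a different cable length or a current price list only requires changing the numbers in `options`; the ranking logic stays the same.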

So what are the trade-offs with DAC versus SFP+ options? It really comes down to distance and the number of connections required. The DAC cables can only span up to 7 meters or so. That means customers have only limited reach within or across racks. If customers have multiple racks or distance requirements of more than 7 meters, FC becomes the more attractive option, from a cost perspective. Also, DAC cables are bulky, and when cabling more than 10 ports, the cable bundles can become unwieldy to deal with.

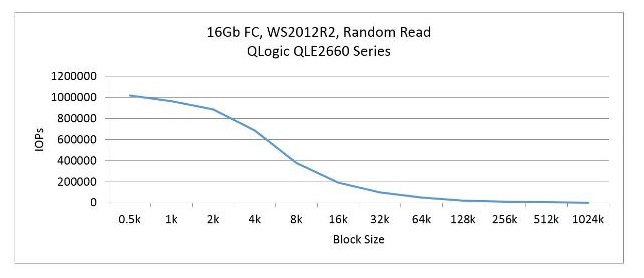

On the performance side, let’s look at the differences. iSCSI adapters have impressive specifications of 10Gbps bandwidth and 1.5 million IOPS, which offers very good performance. For FC, we have 16Gbps of bandwidth and 1.3 million IOPS. So FC has more bandwidth and iSCSI can deliver slightly more transactions. Well, that is, if you take the specifications at face value. If you dig a little deeper, here are some things we learn:

Figure 1: Cavium’s iSCSI Hardware Offload IOPS Performance

Figure 2: Cavium’s QLogic 16Gb FC IOPS performance

If we look at manageability, this is where things have probably changed the most. Keep in mind, Ethernet network management hasn’t really changed much. Network administrators create virtual LANs (vLANs) to separate network traffic and reduce congestion. These network administrators have a variety of tools and processes that allow them to monitor network traffic, run diagnostics and make changes on the fly when congestion starts to impact application performance. The same management approach applies to the iSCSI network and can be done by the same network administrators.

On the FC side, companies like Cavium and HPE have made significant improvements on the software side of things to simplify SAN deployment, orchestration and management. Technologies like fabric-assigned port worldwide name (FA-WWN) from Cavium and Brocade enable the SAN administrator to configure the SAN without having HBAs available and allow a failed server to be replaced without having to reconfigure the SAN fabric. Cavium and Brocade have also teamed up to improve the FC SAN diagnostics capability with Gen 5 (16Gb) Fibre Channel fabrics by implementing features such as Brocade ClearLink™ diagnostics, Fibre Channel Ping (FC ping) and Fibre Channel traceroute (FC traceroute), link cable beacon (LCB) technology and more. HPE’s Smart SAN for HPE 3PAR provides the storage administrator the ability to zone the fabric and map the servers and LUNs to an HPE 3PAR StoreServ array from the HPE 3PAR StoreServ management console.

Another way to look at manageability is in the number of administrators on staff. In many enterprise environments, there are typically dozens of network administrators. In those same environments, there may be fewer than a handful of “SAN” administrators. Yes, there are lots of LAN-connected devices that need to be managed and monitored, and far fewer SAN-connected devices. The point is it doesn’t take an army to manage a SAN with today’s FC management software from vendors like Brocade.

So what is the right answer between FC and iSCSI? Well, it depends. If application performance is the biggest criterion, it’s hard to beat the combination of bandwidth, IOPS and latency of the 16GFC SAN. If compatibility and commonality with existing infrastructures is a critical requirement, 10GbE iSCSI is a good option (assuming the 10GbE infrastructure exists in the first place). If security is a key concern, FC is the best choice. When is the last time you heard of an FC network being hacked into? And if cost is the key criterion, iSCSI with DAC or 10GBASE-T connection is a good choice, understanding the tradeoff in latency and bandwidth performance.

So in very general terms, FC is the best choice for enterprise customers who need high performance, mission-critical capability, high reliability and scalable shared storage connectivity. For smaller customers who are more cost sensitive, iSCSI is a great alternative. iSCSI is also a good protocol for pre-configured systems like hyper-converged storage solutions to provide simple connectivity to existing infrastructure.

As a wise manager once told me many years ago, “If you start with the customer and work backwards, you can’t go wrong.” So the real answer is understand what the customer needs and design the best solution to meet those needs based on the information above.

By Christopher Mash, Senior Director of Automotive Applications & Architecture, Marvell

The in-vehicle networks currently used in automobiles are based on a combination of several different data networking protocols, some of which have been in place for decades. There is the controller area network (CAN), which takes care of the powertrain and related functions; the local interconnect network (LIN), which is predominantly used for passenger/driver comfort purposes that are not time sensitive (such as climate control, ambient lighting, seat adjustment, etc.); the media oriented system transport (MOST), developed for infotainment; and FlexRay™ for anti-lock braking (ABS), electronic power steering (EPS) and vehicle stability functions.

As a result of using different protocols, gateways are needed to transfer data within the infrastructure. The resulting complexity is costly for car manufacturers. It also affects vehicle fuel economy, since the wire harnessing needed for each respective network adds extra weight to the vehicle. The wire harness represents the third heaviest element of the vehicle (after the engine and chassis) and the third most expensive, too. Furthermore, these gateways have latency issues, something that will impact safety-critical applications where rapid response is required.

The number of electronic control units (ECUs) incorporated into cars is continuously increasing, with luxury models now often having 150 or more ECUs, and even standard models now approaching 80-90 ECUs. At the same time, data intensive applications are emerging to support advanced driver assistance system (ADAS) implementation, as we move toward greater levels of vehicle autonomy. All this is causing a significant ramp in data rates and overall bandwidth, with the increasing deployment of HD cameras and LiDAR technology on the horizon.

As a consequence, the entire approach in which in-vehicle networking is deployed needs to fundamentally change, first in terms of the topology used and, second, with regard to the underlying technology on which it relies.

Currently, the networking infrastructure found inside a car is a domain-based architecture. There are different domains for each key function - one for body control, one for infotainment, one for telematics, one for powertrain, and so on. Often these domains employ a mix of different network protocols (e.g., with CAN, LIN and others being involved).

As network complexity increases, it is now becoming clear to automotive engineers that this domain-based approach is becoming less and less efficient. Consequently, in the coming years, there will need to be a migration away from the current domain-based architecture to a zonal one.

A zonal arrangement means data from different traditional domains is connected to the same ECU, based on the location (zone) of that ECU in the vehicle. This arrangement will greatly reduce the wire harnessing required, thereby lowering weight and cost - which in turn will translate into better fuel efficiency. Ethernet technology will be pivotal in moving to zonal-based, in-vehicle networks.
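To make the difference between the two topologies concrete, here is a minimal Python sketch that groups the same set of vehicle functions first by traditional domain and then by physical zone. The function names, domains and zones are hypothetical examples chosen for illustration, not taken from any real vehicle architecture.

```python
from collections import defaultdict

# Hypothetical vehicle functions: (name, traditional domain, physical zone)
FUNCTIONS = [
    ("door_lock_front_left", "body", "front_left"),
    ("speaker_front_left", "infotainment", "front_left"),
    ("throttle_sensor", "powertrain", "front_left"),
    ("door_lock_rear_right", "body", "rear_right"),
    ("speaker_rear_right", "infotainment", "rear_right"),
]


def group_by(functions, key_index):
    """Group function names by the tuple field at key_index."""
    groups = defaultdict(list)
    for entry in functions:
        groups[entry[key_index]].append(entry[0])
    return dict(groups)


# Domain-based: one network per function type, spanning the whole vehicle
by_domain = group_by(FUNCTIONS, 1)

# Zonal: one ECU per location, carrying traffic from several domains
by_zone = group_by(FUNCTIONS, 2)

print("Domain view:", by_domain)
print("Zone view:", by_zone)
```

In the zone view, each local ECU serves functions from several domains that sit physically close together, which is what allows the wiring runs to be shortened.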

In addition to the high data rates that Ethernet technology can support, Ethernet adheres to the universally-recognized OSI communication model. Ethernet is a stable, long-established and well-understood technology that has already seen widespread deployment in the data communication and industrial automation sectors. Unlike other in-vehicle networking protocols, Ethernet has a well-defined development roadmap that is targeting additional speed grades, whereas protocols – like CAN, LIN and others – are already reaching a stage where applications are starting to exceed their capabilities, with no clear upgrade path to alleviate the problem.

Future expectations are that Ethernet will form the foundation upon which all data transfer around the car will occur, providing a common protocol stack that reduces the need for gateways between different protocols (along with the hardware costs and the accompanying software overhead). The result will be a single homogeneous network throughout the vehicle in which all the protocols and data formats are consistent. It will mean that the in-vehicle network will be scalable, allowing functions that require higher speeds (10G for example) and ultra-low latency to be attended to, while also addressing the needs of lower speed functions. Ethernet PHYs will be selected according to the particular application and bandwidth demands - whether it is a 1Gbps device for transporting imaging sensing data, or one for 10Mbps operation, as required for the new class of low data rate sensors that will be used in autonomous driving.
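The idea of choosing a PHY according to bandwidth demand can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the list of speed grades is limited to the three mentioned above (10Mbps, 1Gbps and 10G), the per-link demands are hypothetical numbers, and a real selection would also weigh cost, cabling and latency.

```python
# Speed grades named in the text, in Mbps: 10 Mbps, 1 Gbps and 10 Gbps.
SPEED_GRADES_MBPS = (10, 1_000, 10_000)


def select_speed_grade(required_mbps):
    """Return the lowest listed speed grade that covers the demand."""
    for grade in SPEED_GRADES_MBPS:
        if required_mbps <= grade:
            return grade
    raise ValueError(f"no listed speed grade covers {required_mbps} Mbps")


# Hypothetical per-link bandwidth demands, for illustration only.
demands_mbps = {"low_rate_sensor": 2, "camera": 600, "backbone_link": 4_000}

selected = {name: select_speed_grade(mbps) for name, mbps in demands_mbps.items()}
print(selected)
```

Because every link runs the same core protocol, only the speed grade changes from one link to the next.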

Each Ethernet switch in a zonal architecture will be able to carry data for all the different domain activities. All the different data domains would be connected to local switches and the Ethernet backbone would then aggregate the data, resulting in a more effective use of the available resources and allowing different speeds to be supported, as required, while using the same core protocols. This homogenous network will provide ‘any data, anywhere’ in the car, supporting new applications through combining data from different domains available through the network.

Marvell is leading the way when it comes to the progression of Ethernet-based, in-vehicle networking and zonal architectures by launching, back in the summer of 2017, the AEC-Q100-compliant 88Q5050 secure Gigabit Ethernet switch for use in automobiles. This device not only deals with OSI Layers 1-2 (the physical layer and data link layer) functions associated with standard Ethernet implementations, it also has functions located at OSI Layers 3, 4 and beyond (the network layer, transport layer and higher), such as deep packet inspection (DPI). This, in combination with Trusted Boot functionality, provides automotive network architects with key features vital in ensuring network security.