By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

Can AI beat a human at the game of twenty questions? Yes.

And can a server enhanced by CXL beat an AI server without it? Yes, and by a wide margin.

While CXL technology was originally developed for general-purpose cloud servers, the technology is now finding a home in AI as a vehicle for economically and efficiently boosting the performance of AI infrastructure. To this end, Marvell has been conducting benchmark tests on different AI use cases.

In December, Marvell, Samsung and Liqid showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for conducting vector searches (for analyzing unstructured data within documents) by more than 5x.

In February, Marvell showed how a trio of Structera A CXL compute accelerators can process more queries per second than a cutting-edge server CPU and at a lower latency while leaving the host CPU open for different computing tasks.

Today, this blog post will show how Structera CXL memory expanders can boost performance of inference tasks.

AI and Memory Expansion

Unlike CXL compute accelerators, CXL memory expanders do not contain additional processing cores for near-memory computing. Instead, they supersize memory capacity and bandwidth. Marvell Structera X, released last year, provides a path for adding up to 4TB of DDR5 DRAM or 6TB of DDR4 DRAM to servers (12TB with integrated LZ4 compression) along with 200GB/second of additional bandwidth. Multiple Structera X modules, moreover, can be added to a single server; CXL modules slot into PCIe ports rather than the more limited DIMM slots used for memory.
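As a rough illustration of the arithmetic, the sketch below multiplies the per-module DDR5 figures quoted above (up to 4TB and 200GB/second) by a module count. It assumes capacity and bandwidth scale linearly with the number of modules, which is an idealized upper bound and not a measured result. The `ExpanderSpec` class, the `added_resources` helper and the two-module example are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpanderSpec:
    """Per-module figures, taken from the numbers quoted in the post."""
    capacity_tb: float      # maximum added DRAM capacity, in TB
    bandwidth_gb_s: float   # added memory bandwidth, in GB/second


# DDR5 configuration quoted above: up to 4TB and 200GB/second per module.
STRUCTERA_X_DDR5 = ExpanderSpec(capacity_tb=4.0, bandwidth_gb_s=200.0)


def added_resources(spec: ExpanderSpec, modules: int) -> tuple[float, float]:
    """Return (capacity in TB, bandwidth in GB/s) for a number of modules.

    Assumption: linear scaling with module count (best case only).
    """
    if modules < 0:
        raise ValueError("modules must be non-negative")
    return spec.capacity_tb * modules, spec.bandwidth_gb_s * modules


# Hypothetical server with two modules installed.
capacity, bandwidth = added_resources(STRUCTERA_X_DDR5, 2)
print(f"Up to {capacity:.0f}TB added, up to {bandwidth:.0f}GB/s added")
```

Real-world gains depend on the number of available PCIe lanes, the host platform and the workload, so treat these totals as a ceiling.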

By Vienna Alexander, Marketing Content Professional, Marvell

In the Product of the Year Awards, Electronic Design News (EDN) named Marvell as the winner of the Interconnects category for its 3nm 1.6T PAM4 Interconnect Platform, known as Ara. Ara is the industry’s first 3nm PAM4 DSP specializing in bandwidth, power efficiency and integration for AI and data center scale-out accelerated infrastructure.

These awards are a 50-year tradition that recognizes outstanding products that demonstrate significant technological advancement, especially innovative design, or a substantial improvement in price or performance. More than 100 products spanning 13 categories were evaluated to determine the winners.

The Ara 3nm 1.6T PAM4 DSP integrates eight 200G electrical lanes and eight 200G optical lanes in a compact, standardized module form factor. Ara sets a new standard in optical interconnect technology, integrating advanced laser drivers and signal processing into a single device, thereby reducing power per bit. With the product, system design is simplified across entire AI data center network stacks. Power consumption is also reduced, enabling denser optical connectivity and faster deployment of AI clusters.
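The 1.6T figure follows directly from the lane count: eight lanes at 200G each. A minimal sketch of that arithmetic is shown here. The `aggregate_tbps` helper is a hypothetical name used for illustration, and the calculation uses nominal lane rates only, ignoring encoding and protocol overhead.

```python
def aggregate_tbps(lanes: int, lane_rate_gbps: int) -> float:
    """Nominal aggregate rate in Tb/s for a set of equal-rate lanes."""
    return lanes * lane_rate_gbps / 1000


# Eight 200G lanes per side, as described for Ara.
print(aggregate_tbps(lanes=8, lane_rate_gbps=200))  # 1.6
```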

Ara has also received other recognitions, demonstrating Marvell leadership in optical DSPs and the interconnect realm.

By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

While CXL technology was originally developed for general-purpose cloud servers, it is now emerging as a key enabler for boosting the performance and ROI of AI infrastructure.

The logic is straightforward. Training and inference require rapid access to massive amounts of data. However, the memory channels on today’s XPUs and CPUs struggle to keep pace, creating the so-called “memory wall” that slows processing. CXL breaks this bottleneck by leveraging available PCIe ports to deliver additional memory bandwidth, expand memory capacity and, in some cases, integrate near-memory processors. As an added advantage, CXL provides these benefits at a lower cost and lower power profile than the usual way of adding more processors.

To showcase these benefits, Marvell conducted benchmark tests across multiple use cases to demonstrate how CXL technology can elevate AI performance.

In December, Marvell and its partners showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for vector searches used to analyze unstructured data within documents by more than 5x.

Here’s another use case: deploying CXL to lower latency.

Lower Latency? Through CXL?

At first glance, lower latency and CXL might seem contradictory. Memory connected through a CXL device sits farther from the processor than memory connected via local memory channels. With standard CXL devices, this typically results in higher latency between CXL memory and the primary processor.

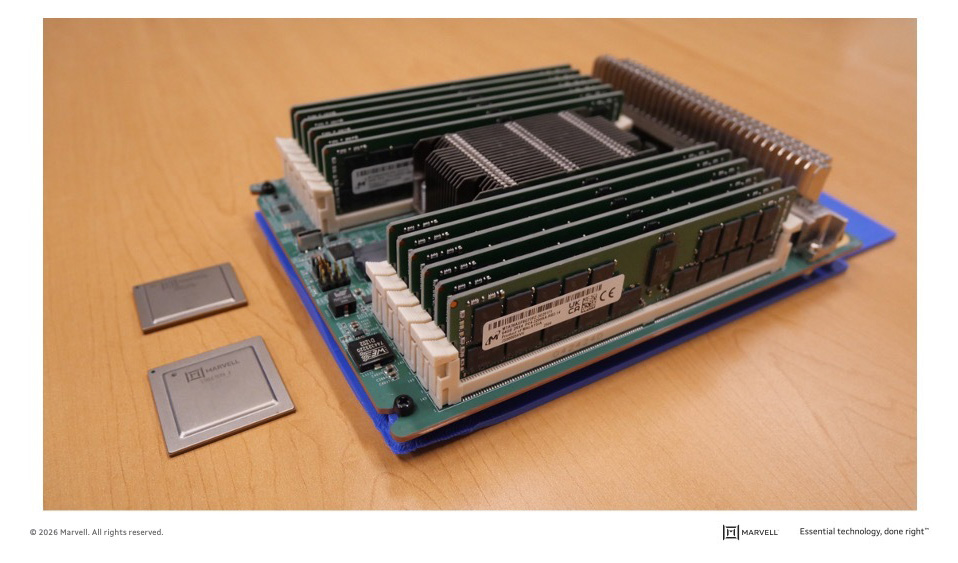

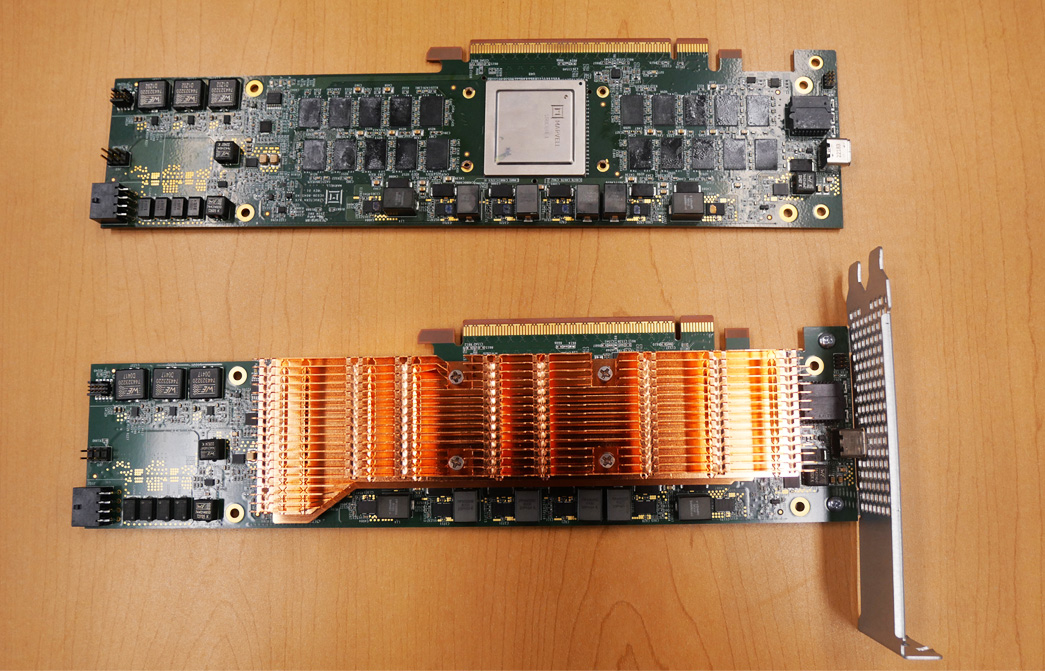

Marvell Structera A CXL memory accelerator boards with and without heat sinks.

By Vienna Alexander, Marketing Content Professional, Marvell

Recently, Marvell joined SixFive Media to discuss the vision Marvell has for compute and connectivity. Below are some of the key clips from their conversation, uncovering the continued transformation of innovation in enabling AI applications.

By Rohan Gandhi, Director of Product Management for Switching Products, Marvell

Power and space are two of the most critical resources in building AI infrastructure. That’s why Marvell is working with cabling partners and other industry experts to build a framework that enables data center operators to integrate co-packaged copper (CPC) interconnects into scale-up networks.

Unlike traditional printed circuit board (PCB) traces, CPCs aren’t embedded in circuit boards. Instead, CPCs consist of discrete ribbons or bundles of twinax cable that run alongside the board. Taking the connection out of the board extends the reach of copper connections without the need for additional components such as equalizers or amplifiers. It also reduces interference, improves signal integrity, and lowers the power budget of AI networks.

Being completely passive, CPCs can’t match the reach of active electrical cables (AECs) or optical transceivers. They do, however, extend farther than traditional direct attach copper (DAC) cables, making them an optimal solution for XPU-to-XPU connections within a tray or for connecting XPUs in a tray to the backplane. Typical 800G CPC connections between processors within the same tray span a few hundred millimeters, while XPU-to-backplane connections can reach 1.5 meters. Looking ahead, 1.6T CPCs based around 200G lanes are expected within the next two years, followed by 3.2T solutions.

While the vision can be straightforward to describe, it involves painstaking engineering and cooperation across different ecosystems. Marvell has been cultivating partnerships to ensure a smooth transition to CPCs as well as create an environment where the technology can evolve and scale rapidly.