By Annie Liao, Product Management Director, Connectivity, Marvell

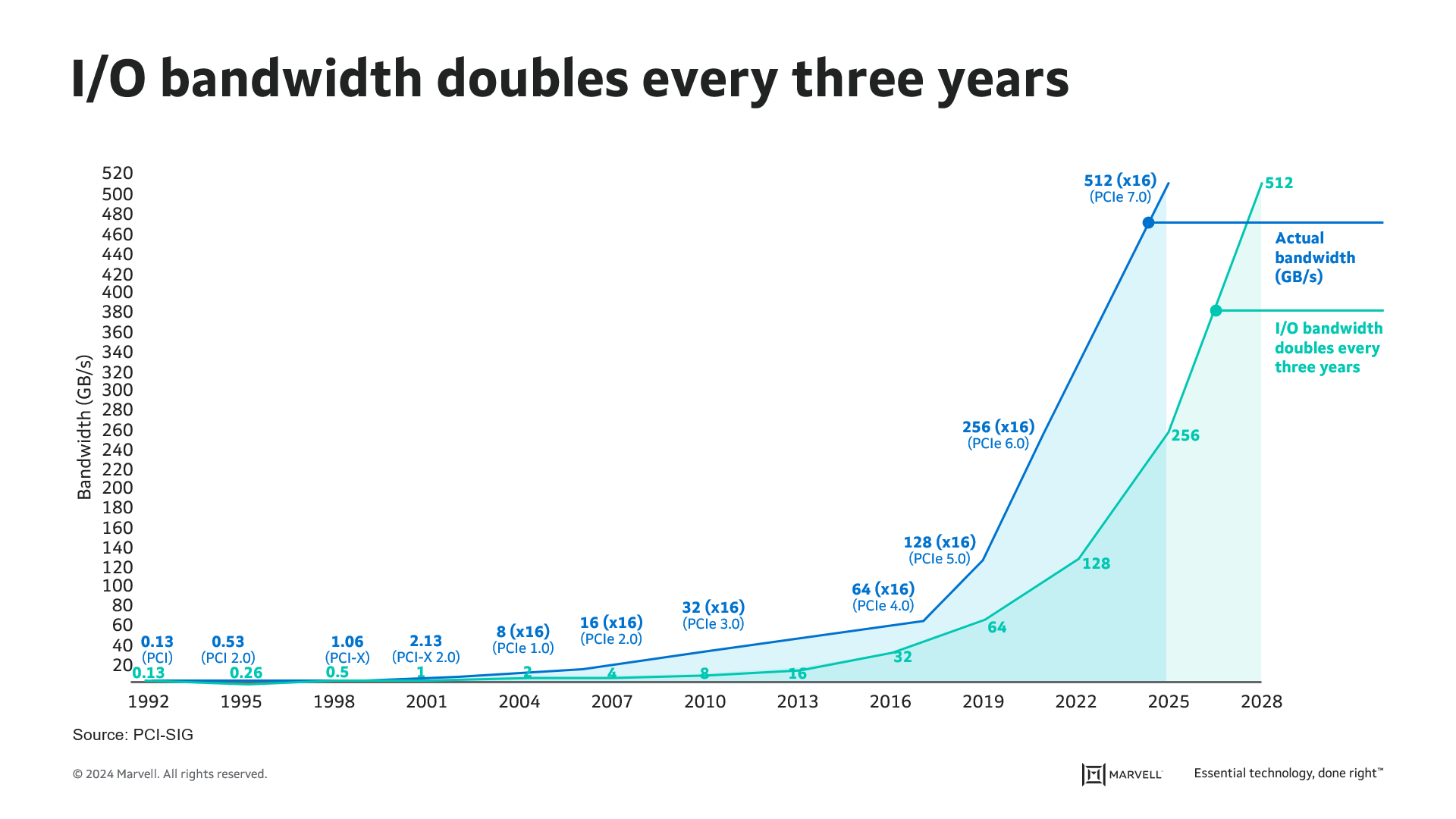

PCIe has historically served as the protocol for communication between the CPU and computer subsystems. Its speed has increased steadily since PCI Express debuted in 2003, and after 20 years of development the industry is now at PCIe Gen 5, with an I/O rate of 32 GT/s (a raw 32 Gbps) per lane. Many factors are driving the PCIe speed increase, the most prominent being artificial intelligence (AI) and machine learning (ML). For CPUs and AI accelerators/GPUs to work together effectively on larger training models, the bandwidth of the PCIe-based interconnects between them must scale with the exponentially growing parameter counts and data sets used in AI models. While the number of PCIe lanes supported increases with each generation, the physical constraints of package beachfront and PCB routing limit the maximum number of lanes in a system. That leaves a higher I/O speed as the only way to move more data per second. The compute interconnect bandwidth demand fueled by AI and ML is driving a faster transition to the next generation, PCIe Gen 6.
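To make the lane-count versus lane-speed trade-off concrete, here is a minimal sketch that computes raw one-direction link rates from the published per-lane signaling rates. The function names and the 1024 Gb/s target are illustrative assumptions, and encoding and protocol overhead are deliberately ignored, so delivered throughput would be lower.

```python
import math

# Raw per-lane signaling rate in GT/s for each PCIe generation.
# Encoding, FEC, and protocol overhead are ignored in this sketch.
RAW_RATE_GT_S = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0, 5: 32.0, 6: 64.0}


def raw_link_gbps(gen: int, lanes: int) -> float:
    """Raw one-direction link rate in Gb/s, before any overhead."""
    return RAW_RATE_GT_S[gen] * lanes


def lanes_for_target(gen: int, target_gbps: float) -> int:
    """Smallest lane count whose raw rate meets a (hypothetical) target."""
    return math.ceil(target_gbps / RAW_RATE_GT_S[gen])


if __name__ == "__main__":
    target = 1024.0  # hypothetical raw bandwidth target in Gb/s
    for gen in (4, 5, 6):
        print(
            f"Gen {gen}: x16 raw = {raw_link_gbps(gen, 16):.0f} Gb/s, "
            f"lanes needed for {target:.0f} Gb/s = {lanes_for_target(gen, target)}"
        )
```

Under these assumptions, a target that takes 32 lanes at Gen 5 fits in 16 lanes at Gen 6, which is why a faster lane matters once the lane count is capped by package and board constraints.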

PCIe has used 2-level Non-Return-to-Zero (NRZ) modulation since its inception, and every speed increase up to Gen 5 was achieved by raising the I/O speed of that NRZ signal. For Gen 6, PCI-SIG adopted 4-level Pulse-Amplitude Modulation (PAM4), in which each symbol takes one of four levels and so encodes 2 bits of data (00, 01, 10, 11). The reduced margin that results from moving from 2-level to 4-level signaling has also necessitated Forward Error Correction (FEC), a first for PCIe links. With the adoption of PAM4 signaling and FEC, Gen 6 marks an inflection point for PCIe from both the signaling and protocol layer perspectives.
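The difference between the two modulations can be sketched in a few lines. The example below is illustrative only: the Gray-coded bit-pair-to-level table is an assumption chosen for the demonstration (consult the PCIe 6.0 specification for the actual mapping), and the margin figure is the ideal case for equally spaced levels at the same peak-to-peak swing.

```python
import math

# Illustrative bit-pair -> level table (Gray-coded: neighboring levels
# differ by one bit). This mapping is an assumption for the sketch.
PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def nrz_encode(bits):
    """NRZ: one 2-level symbol per bit."""
    return list(bits)


def pam4_encode(bits):
    """PAM4: one 4-level symbol per pair of bits."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def eye_height_fraction(levels: int) -> float:
    """Ideal eye height relative to NRZ for equally spaced levels."""
    return 1.0 / (levels - 1)


bits = [0, 0, 0, 1, 1, 1, 1, 0]
nrz_symbols = nrz_encode(bits)    # 8 symbols for 8 bits
pam4_symbols = pam4_encode(bits)  # 4 symbols for the same 8 bits

# Ideal amplitude penalty of PAM4 versus NRZ, in dB.
pam4_penalty_db = 20 * math.log10(eye_height_fraction(4))

print(len(nrz_symbols), len(pam4_symbols), round(pam4_penalty_db, 2))
```

PAM4 carries the same bits in half as many symbols, so the data rate doubles at an unchanged symbol rate. The cost is that each of the three eyes is at best one third the height of the NRZ eye, which is the reduced margin that FEC compensates for.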

In addition to AI/ML, disaggregation of memory and storage is an emerging trend in compute applications that has a significant impact on how PCIe-based interconnects are used. PCIe has historically been adopted for on-board and in-chassis interconnects; attaching more front-facing NVMe SSDs is a common example. With the growing trend toward flexible resource allocation and the advancement of CXL technology, the server industry is now moving toward disaggregated and composable infrastructure. In this disaggregated architecture, the PCIe endpoints sit in different chassis from the PCIe root complex, so the PCIe link must travel out of the system chassis. This is typically achieved with direct attach cables (DACs) that can reach 3-5 m.

Copyright © 2025 Marvell, All rights reserved.