By Todd Owens, Field Marketing Director, Marvell

While Fibre Channel (FC) has been around for a couple of decades now, the Fibre Channel industry continues to develop the technology in ways that keep it at the forefront of the data center for shared storage connectivity. Fibre Channel has always been a reliable technology, and continued innovations in performance, security and manageability have made Fibre Channel I/O the go-to connectivity option for business-critical applications that leverage the most advanced shared storage arrays.

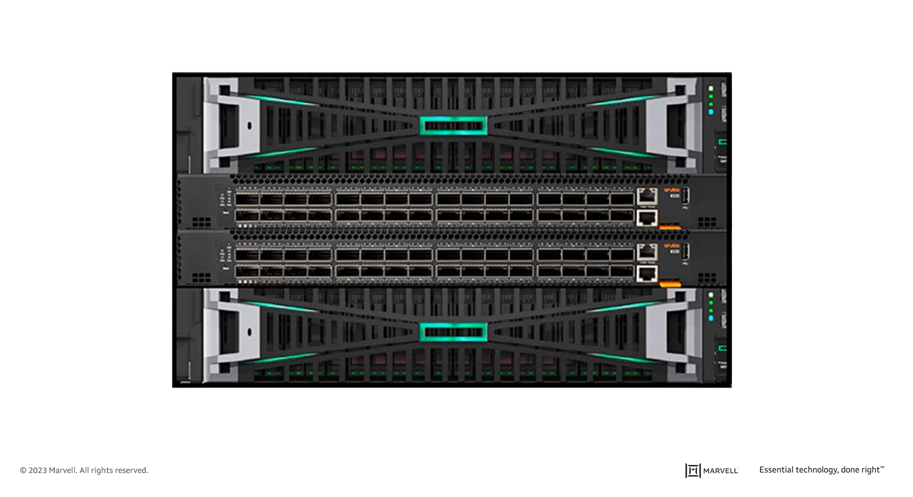

A development that highlights the progress and significance of Fibre Channel is Hewlett Packard Enterprise’s (HPE) recent announcement of the latest offering in its Storage as a Service (SaaS) lineup with 32Gb Fibre Channel connectivity. HPE GreenLake for Block Storage MP powered by HPE Alletra Storage MP hardware features a next-generation platform connected to the storage area network (SAN) using either traditional SCSI-based FC or NVMe over FC connectivity. This solution not only provides customers with highly scalable capabilities but also delivers cloud-like management, allowing HPE customers to consume block storage any way they desire – own and manage, outsource management, or consume on demand.

HPE GreenLake for Block Storage powered by Alletra Storage MP

At launch, HPE is providing FC connectivity from this storage system to the host servers, supporting both FC-SCSI and native FC-NVMe. HPE plans to provide additional connectivity options in the future, but the fact that it prioritized FC connectivity speaks volumes about customer demand for mature, reliable, low-latency FC technology.

By Ian Sagan, Marvell Field Applications Engineer; Jacqueline Nguyen, Marvell Field Marketing Manager; and Nick De Maria, Marvell Field Applications Engineer

Have you ever been stuck in bumper-to-bumper traffic? Frustrated by long checkout lines at the grocery store? Trapped at the back of a crowded plane while late for a connecting flight?

Such bottlenecks waste time, energy and money. And while today’s digital logjams might seem invisible or abstract by comparison, they are just as costly, multiplied by zettabytes of data struggling through billions of devices – a staggering volume of data that is only continuing to grow.

Fortunately, emerging Non-Volatile Memory Express technology (NVMe) can clear many of these digital logjams almost instantaneously, empowering system administrators to deliver quantum leaps in efficiency, resulting in lower latency and better performance. To the end user this means avoiding the dreaded spinning icon and getting an immediate response.

By Amir Bar-Niv, VP of Marketing, Automotive Business Unit, Marvell and John Bergen, Sr. Product Marketing Manager, Automotive Business Unit, Marvell

In the early decades of American railroad construction, competing companies laid their tracks at different widths. Such inconsistent standards drove inefficiencies, preventing the easy exchange of rolling stock from one railroad to the next, and impeding the infrastructure from coalescing into a unified national network. Only in the 1860s, when a national standard emerged – 4 feet, 8-1/2 inches – did railroads begin delivering their true, networked potential.

Some 160 years later, as Marvell and its competitors race to reinvent the world’s transportation networks, universal design standards are more important than ever. Recently, Marvell’s 88Q5050 Ethernet Device Bridge became the first of its type in the automotive industry to receive Avnu certification, meeting exacting new technical standards that facilitate the exchange of information between diverse in-car networks and enable today’s data-dependent vehicles to operate smoothly, safely and reliably.

By Todd Owens, Field Marketing Director, Marvell

As native Non-Volatile Memory Express (NVMe®) shared-storage arrays continue enhancing our ability to store and access more information faster across a much bigger network, customers of all sizes – enterprise, mid-market and SMBs – confront a common question: what is required to take advantage of this quantum leap forward in speed and capacity?

Of course, NVMe technology itself is not new; it is commonly found in laptops, servers and enterprise storage arrays. NVMe provides an efficient command set that is specific to memory-based storage, is designed to run over PCIe 3.0 or PCIe 4.0 bus architectures for increased performance, and, with 64,000 command queues and 64,000 commands per queue, can provide much more scalability than other storage protocols.
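To put the queue figures in perspective, here is a minimal Python sketch of the arithmetic. It uses the rounded numbers quoted above as its inputs; the constant and function names are hypothetical and chosen only for illustration.

```python
# Rounded figures as quoted in the text (illustrative inputs, not spec-exact values).
NVME_QUEUES = 64_000
NVME_COMMANDS_PER_QUEUE = 64_000


def max_outstanding_commands(queues: int, commands_per_queue: int) -> int:
    """Upper bound on the number of commands that can be queued at once."""
    return queues * commands_per_queue


total = max_outstanding_commands(NVME_QUEUES, NVME_COMMANDS_PER_QUEUE)
print(f"{total:,}")  # prints 4,096,000,000
```

In other words, the quoted figures allow for roughly four billion commands in flight, which is the headroom behind the scalability claim.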

By Todd Owens, Field Marketing Director, Marvell

Hewlett Packard Enterprise (HPE) recently updated its product naming protocol for the Ethernet adapters in its HPE ProLiant and HPE Apollo servers. Its new approach is to include the ASIC vendor’s name in the HPE adapter’s product name. This commonsense approach eliminates the need for model number decoder rings on the part of Channel Partners and the HPE Field team and provides everyone with more visibility and clarity. The change also aligns more closely with the approach HPE has been taking with its “Open” adapters on HPE ProLiant Gen10 Plus servers. All of this is good news for everyone in the server sales ecosystem, including the end user. The products’ core SKU numbers also remain the same.

Copyright © 2025 Marvell, All rights reserved.