By Maen Suleiman, Senior Software Product Line Manager, Marvell and Gorka Garcia, Senior Lead Engineer, Marvell Semiconductor, Inc.

Thanks to the respective merits of its ARMADA® and OCTEON TX® multi-core processor offerings, Marvell is in a prime position to address a broad spectrum of demanding applications situated at the edge of the network. These applications can serve a multitude of markets, including small business, industrial and enterprise, and will require specialized technologies such as efficient packet processing, machine learning and connectivity to the cloud. As part of its collaboration with Amazon Web Services® (AWS), Marvell will be illustrating the capabilities of edge computing applications through an exciting new demo that will be shown to attendees at Arm TechCon, which is being held at the San Jose Convention Center, October 16th-18th.

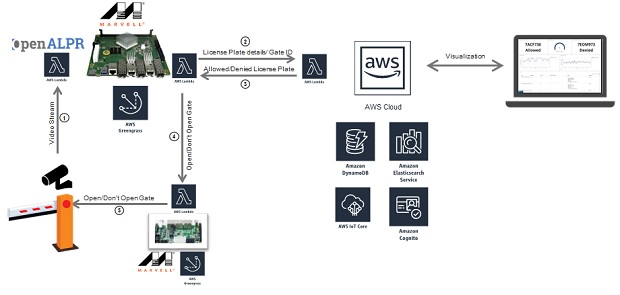

This demo takes the form of an automated parking lot. An ARMADA processor-based Marvell MACCHIATObin® community board, which integrates the AWS Greengrass® software, is used to serve as an edge compute node. The Marvell edge compute node receives video streams from two cameras that are placed at the entry gate and exit of the parking lot. The ARMADA processor-based compute node runs AWS Greengrass Core; executes two Lambda functions to process the incoming video streams and identify the vehicles entering the garage through their license plates; and subsequently checks whether the vehicles are authorized or unauthorized to enter the parking lot.

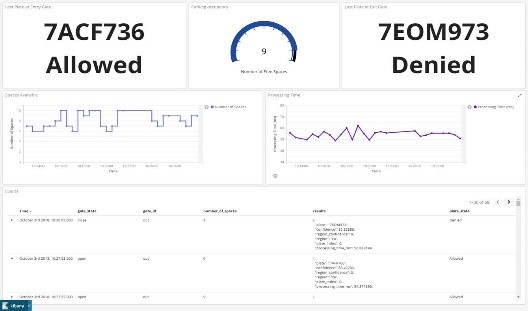

The first Lambda function runs Automatic License Plate Recognition (OpenALPR) software. It obtains the license plate number and delivers it, together with the gate ID (Entry/Exit), to a Lambda function running on the AWS® cloud that accesses a DynamoDB® database. The cloud Lambda function reads the DynamoDB whitelist database and determines whether the license plate belongs to an authorized car. This information is sent back to a second Lambda function at the edge of the network, on the MACCHIATObin board, which is responsible for managing the parking lot capacity and opening or closing the gate. This second Lambda function also logs edge activity to the AWS Cloud Elasticsearch® service, which works as a backend for Kibana®, an open source data visualization engine. Kibana gives a remote operator direct access to information concerning parking lot occupancy, entry gate status and exit gate status. Furthermore, the AWS Cognito service authenticates users for access to Kibana.
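The exchange between the edge and cloud Lambda functions can be illustrated with a minimal sketch. This is not the demo's actual code: the message fields (`plate`, `gate_id`, `verdict`), the function names and the sample plates are all assumptions made for illustration, and an in-memory set stands in for the DynamoDB whitelist so that the example runs without any AWS services.

```python
import json

# Hypothetical in-memory stand-in for the DynamoDB whitelist table.
AUTHORIZED_PLATES = {"7ABC123", "4XYZ789"}


def build_plate_message(plate: str, gate_id: str) -> str:
    """Edge side: package the recognized plate together with its gate ID."""
    if gate_id not in ("entry", "exit"):
        raise ValueError(f"unknown gate id: {gate_id!r}")
    # Normalize the plate so lookups do not depend on case or stray spaces.
    return json.dumps({"plate": plate.strip().upper(), "gate_id": gate_id})


def cloud_verdict(message: str, whitelist=AUTHORIZED_PLATES) -> dict:
    """Cloud side: look up the plate and return an allowed/denied verdict."""
    payload = json.loads(message)
    allowed = payload["plate"] in whitelist
    return {
        "plate": payload["plate"],
        "gate_id": payload["gate_id"],
        "verdict": "allowed" if allowed else "denied",
    }


if __name__ == "__main__":
    message = build_plate_message("7abc123", "entry")
    print(cloud_verdict(message))
```

In a real deployment the whitelist lookup would be a database query and the messages would travel between the Greengrass Core and the cloud, but the decision itself stays this simple: a plate and a gate ID go up, and a verdict comes back.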

After the AWS Cloud Lambda function sends the verdict (allowed/denied) to the second Lambda function running on the MACCHIATObin board, this MACCHIATObin Lambda function communicates with the gate controller, which consists of a Marvell ESPRESSObin® board and opens or closes the gate as required.

The ESPRESSObin board runs as an AWS Greengrass IoT device that will be responsible for opening the gate according to the information received from the MACCHIATObin board’s second Lambda function.
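The second Lambda function's job, combining the cloud verdict with the lot's capacity to decide what to tell the gate controller, can be sketched as follows. The `ParkingLot` class, its method and the `"open"`/`"close"` command strings are hypothetical names chosen for this example. The rule that a denied plate keeps either gate closed is also an assumption, since the description above does not spell out how the exit gate treats unauthorized plates.

```python
from dataclasses import dataclass


@dataclass
class ParkingLot:
    """Tracks occupancy and turns verdicts into gate commands."""

    capacity: int
    occupied: int = 0

    def handle_verdict(self, gate_id: str, verdict: str) -> str:
        """Return the command for the gate controller: "open" or "close"."""
        if gate_id not in ("entry", "exit"):
            raise ValueError(f"unknown gate id: {gate_id!r}")
        if verdict != "allowed":
            return "close"  # assumption: denied plates never open a gate
        if gate_id == "entry":
            if self.occupied >= self.capacity:
                return "close"  # lot is full, keep the entry gate shut
            self.occupied += 1
            return "open"
        # Exit gate: free one space, never dropping below zero.
        self.occupied = max(0, self.occupied - 1)
        return "open"


if __name__ == "__main__":
    lot = ParkingLot(capacity=2)
    print(lot.handle_verdict("entry", "allowed"), lot.occupied)
```

The returned command is what would be forwarded to the ESPRESSObin gate controller, and the `occupied` counter is the kind of value that would be logged for display in Kibana.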

This demo showcases the ability to run a machine learning algorithm using AWS Lambda at the edge, which makes the identification process extremely fast. This is possible thanks to the high-performance, low-power Marvell OCTEON TX and ARMADA multi-core processors. Marvell infrastructure processors have the potential to cover a range of higher-end networking and security applications that can benefit from the maturity of the Arm® ecosystem and the ability to run machine learning in a multi-core environment at the edge of the network.

Those visiting the Arm Infrastructure Pavilion (Booth# 216) at Arm TechCon (San Jose Convention Center, October 16th-18th) will be able to see the Marvell Edge Computing demo powered by AWS Greengrass.

For information on how to enable AWS Greengrass on Marvell MACCHIATObin and Marvell ESPRESSObin community boards, please visit http://wiki.macchiatobin.net/tiki-index.php?page=AWS+Greengrass+on+MACCHIATObin and http://wiki.espressobin.net/tiki-index.php?page=AWS+Greengrass+on+ESPRESSObin.

By Maen Suleiman, Senior Software Product Line Manager, Marvell

The adoption of multi-gigabit networks and planned roll-out of next generation 5G networks will continue to create greater available network bandwidth as more and more computing and storage services get funneled to the cloud. Increasingly, applications running on IoT and mobile devices connected to the network are becoming more intelligent and compute-intensive. However, with so many resources being channeled to the cloud, there is strain on today’s networks.

Instead of following a conventional cloud-centralized model, next generation architecture will require a much greater proportion of its intelligence to be distributed throughout the network infrastructure. High performance computing hardware (accompanied by the relevant software) will need to be located at the edge of the network. A distributed model of operation should provide the compute and security functionality required for edge devices, enable compelling real-time services and overcome inherent latency issues for applications like automotive, virtual reality and industrial computing. These applications also need analytics of high resolution video and audio content.

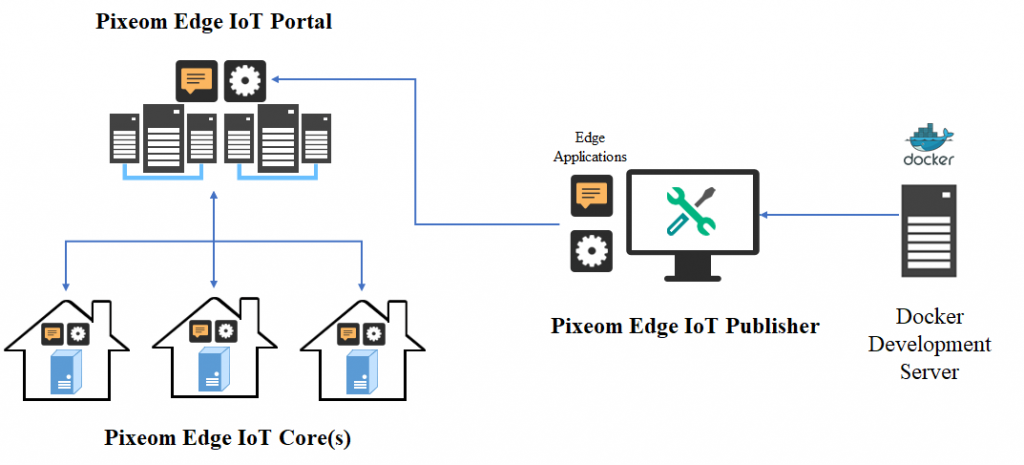

Through use of its high performance ARMADA® embedded processors, Marvell is able to demonstrate a highly effective solution that will facilitate edge computing implementation on the Marvell MACCHIATObin™ community board using the ARMADA 8040 system on chip (SoC). At CES® 2018, Marvell and Pixeom teams will be demonstrating an effective yet cost-efficient edge computing system using the Marvell MACCHIATObin community board in conjunction with the Pixeom Edge Platform to extend the functionality of Google Cloud Platform™ services at the edge of the network. The Marvell MACCHIATObin community board will run Pixeom Edge Platform software, which extends cloud capabilities by orchestrating and running Docker container-based micro-services on the board.

Currently, the transmission of data-heavy, high resolution video content to the cloud for analysis purposes places a lot of strain on network infrastructure, proving to be both resource-intensive and also expensive. Using Marvell’s MACCHIATObin hardware as a basis, Pixeom will demonstrate its container-based edge computing solution which provides video analytics capabilities at the network edge. This unique combination of hardware and software provides a highly optimized and straightforward way to enable more processing and storage resources to be situated at the edge of the network. The technology can significantly increase operational efficiency levels and reduce latency.

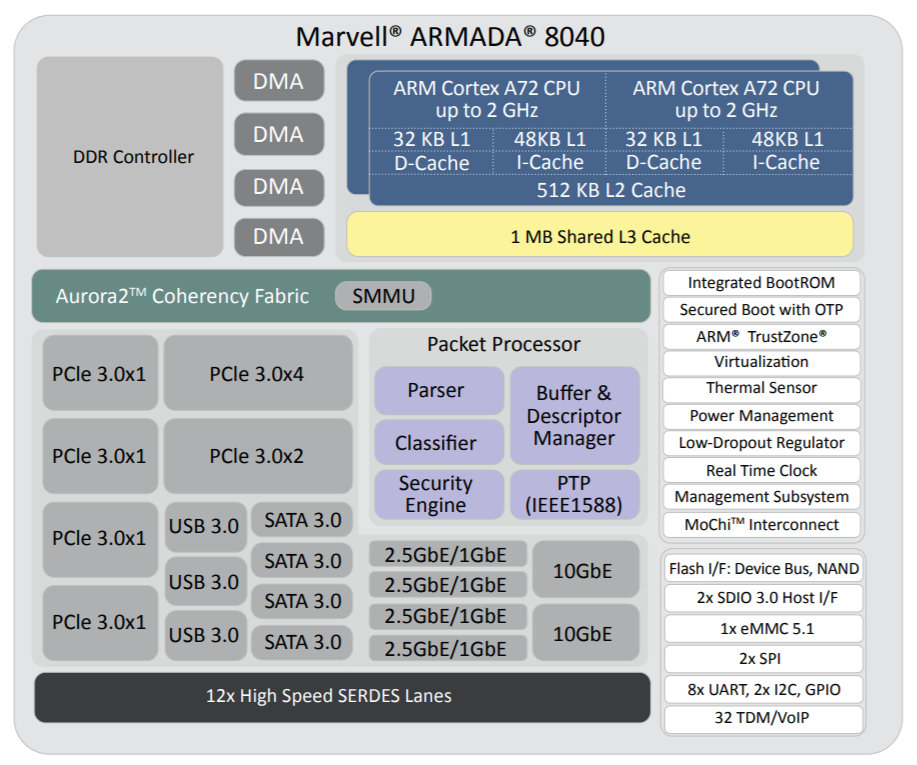

The Marvell and Pixeom demonstration deploys Google TensorFlow™ micro-services at the network edge to enable a variety of different key functions, including object detection, facial recognition, text reading (for name badges, license plates, etc.) and intelligent notifications (for security/safety alerts). This technology encompasses the full scope of potential applications, covering everything from video surveillance and autonomous vehicles, right through to smart retail and artificial intelligence. Pixeom offers a complete edge computing solution, enabling cloud service providers to package, deploy, and orchestrate containerized applications at scale, running on premise “Edge IoT Cores.” To accelerate development, Cores come with built-in machine learning, FaaS, data processing, messaging, API management, analytics, offloading capabilities to Google Cloud, and more.

The MACCHIATObin community board is based on Marvell’s ARMADA 8040 processor, which has a 64-bit quad-core ARMv8 processor (running at up to 2.0 GHz), and supports up to 16GB of DDR4 memory and a wide array of different I/Os. Through use of Linux® on the Marvell MACCHIATObin board, the multifaceted Pixeom Edge IoT platform can facilitate implementation of edge computing servers (or cloudlets) at the periphery of the cloud network. Marvell will be able to show the power of this popular hardware platform to run advanced machine learning, data processing, and IoT functions as part of Pixeom’s demo. The role-based access features of the Pixeom Edge IoT platform also mean that developers situated in different locations can collaborate with one another to create compelling edge computing implementations. Pixeom supplies all the edge computing support needed to allow users of Marvell embedded processors to establish their own edge-based applications, thus offloading operations from the center of the network.

Marvell will also be demonstrating the compatibility of its technology with the Google Cloud platform, which enables the management and analysis of deployed edge computing resources at scale. Here, once again the MACCHIATObin board provides the hardware foundation needed by engineers, supplying them with all the processing, memory and connectivity required.

Those visiting Marvell’s suite at CES (Venetian, Level 3 - Murano 3304, 9th-12th January 2018, Las Vegas) will be able to see a series of different demonstrations of the MACCHIATObin community board running cloud workloads at the network edge. Make sure you come by!